Final Exam#

Instructions#

The final examination comprises three primary questions. Ensure to address all the questions by presenting your codes comprehensively and summarizing your solutions, outcomes, and analysis in a clear paragraph at the end of your answers for each question.

All required datasets for the exam are accessible from

demo_data/final.Similar to previous assignments, download the current notebook as your answer sheet and submit both your notebook (

*.ipynb) and the HTML output (*.html) via Moodle.Deadline for Final Submission: 24:00, Sunday, June 9, via Moodle.

Warning

Evaluation will primarily rely on the outputs embedded in the HTML version of your notebook file. Ensure all essential outputs from your code and your text for summarization are clearly formatted in the HTML file.

Tip

If you have any questions regarding the descriptions of the tasks, please send me an email message. I will response promptly.

Question 1 (35%)#

Please download the dataset from demo_data/final/spoken_genre_dataset.csv.

This dataset contains utterances from different speakers, each labeled with its corresponding spoken genre. There are two types of spoken genres: 1 for informative and 0 for entertaining speech.

Your task is to create a series of deep learning based classifiers to effectively predict the spoken genre of each utterance. In your experiments, please do the following:

Remember to randomize the data and split it into train and test sets.

Consider models based on LSTM, bi-directional LSTM, and transformers.

Utilize pre-trained word embeddings in your models.

Fine-tune the hyperparameters for optimal performance.

Compare the performances of all the models created in your experiments.

Perform a qualitative analysis using LIME based on the best-performing classifier.

At the end of your answer, please include a clear paragraph summarizing the entire experimental process and your findings.

Question 2 (35%)#

Please download the dataset from demo_data/final/cmn.txt.

This dataset contains parallel sentence pairs in English and Chinese. Your task is to create a sequence-to-sequence model with attention to effectively translate English text into Chinese. In your experiment, please do the following:

Randomize the data and split it into training and testing sets.

Consider models based on LSTM and attention mechanisms.

Utilize pre-trained word embeddings in your models.

Choose one sentence from the data and illustrate the attention mechanism visually.

Report the model performance on the testing set.

At the end of your answer, please include a clear paragraph summarizing the entire experimental process and your findings.

Question 3 (30%)#

Please download the dataset from demo_data/final/chinse_famous_quotes.txt.

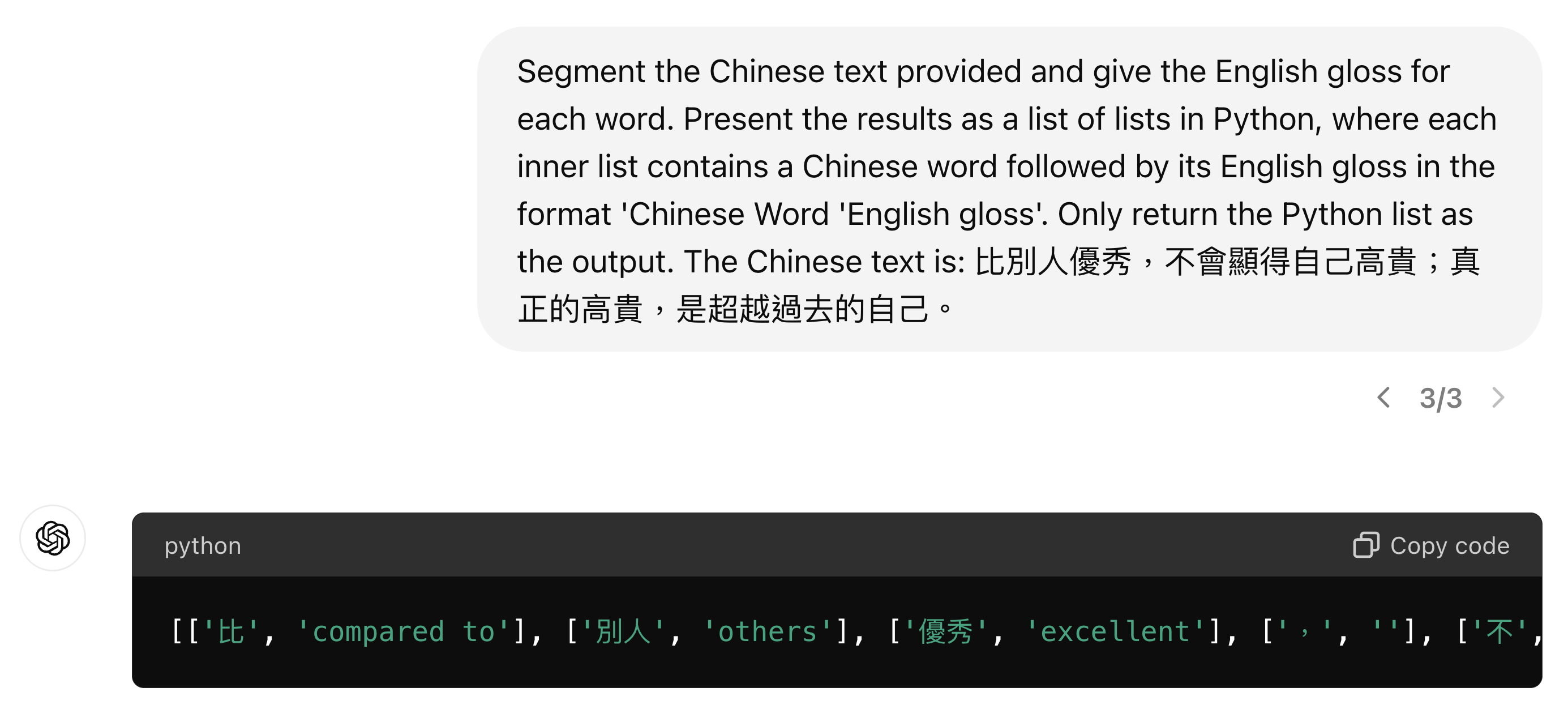

This dataset contains 25 Chinese sentences. Your task is to create a Langchain-based application that utilizes the capabilities of LLMs to accomplish the following linguistic tasks on the dataset:

Tokenize each Chinese sentence into word tokens.

Provide an English gloss for each Chinese word token.

Present the results in the format of an embeded Python list (see below).

Evaluate the performance of the LLM.

At the end of your answer, please include a clear paragraph summarizing the entire experimental process and your findings/observations.

Fig. 1 An example of a ChatGPT prompt and its responses#

[['比', 'compared to'], ['別人', 'others'], ['優秀', 'excellent'], [',', ''], ['不', 'not'], ['會', 'will'], ['顯得', 'appear'], ['自己', 'oneself'], ['高貴', 'noble'], [';', ''], ['真正', 'true'], ['的', ''], ['高貴', 'noble'], [',', ''], ['是', 'is'], ['超越', 'surpass'], ['過去', 'the past'], ['的', ''], ['自己', 'oneself'], ['。', '']]

Important

You have the flexibility to utilize any LLMs accessible to you. Your response won’t be assessed based on the model chosen. Instead, it will be evaluated on the functionality of the code as a potential application.