Chinese Word Segmentation#

Natural language processing methods are closely tied to the characteristics of the target language.

For Chinese, the most intimidating task is deciding on the basic linguistic unit to work with, because written Chinese does not mark word boundaries with spaces.

In this tutorial, we will look at a few word segmentation methods in Python.

Segmentation using jieba#

Before we start, if you haven’t installed the module, please install it as follows:

$ conda activate python-notes

$ pip install jieba

Check the module documentation for more detail:

jieba

import jieba

text = """

高速公路局說,目前在國道3號北向水上系統至中埔路段車多壅塞,已回堵約3公里。另外,國道1號北向仁德至永康路段路段,已回堵約有7公里。建議駕駛人提前避開壅塞路段改道行駛,行經車多路段請保持行車安全距離,小心行駛。

國道車多壅塞路段還有國1內湖-五堵北向路段、楊梅-新竹南向路段;國3三鶯-關西服務區南向路段、快官-霧峰南向路段、水上系統-中埔北向路段;國6霧峰系統-東草屯東向路段、國10燕巢-燕巢系統東向路段。

"""

text_jb = jieba.lcut(text)

print(' | '.join(text_jb))

| 高速公路 | 局說 | , | 目前 | 在 | 國道 | 3 | 號 | 北向 | 水上 | 系統 | 至 | 中埔 | 路段 | 車多 | 壅塞 | , | 已回 | 堵約 | 3 | 公里 | 。 | 另外 | , | 國道 | 1 | 號 | 北向 | 仁德 | 至 | 永康 | 路段 | 路段 | , | 已回 | 堵 | 約 | 有 | 7 | 公里 | 。 | 建議 | 駕駛人 | 提前 | 避開 | 壅塞 | 路段 | 改道 | 行駛 | , | 行經車 | 多 | 路段 | 請 | 保持 | 行車 | 安全 | 距離 | , | 小心 | 行駛 | 。 |

| 國道 | 車多 | 壅塞 | 路段 | 還有國 | 1 | 內湖 | - | 五堵 | 北向 | 路段 | 、 | 楊梅 | - | 新竹 | 南向 | 路段 | ; | 國 | 3 | 三鶯 | - | 關 | 西服 | 務區 | 南向 | 路段 | 、 | 快官 | - | 霧峰 | 南向 | 路段 | 、 | 水上 | 系統 | - | 中埔 | 北向 | 路段 | ; | 國 | 6 | 霧峰 | 系統 | - | 東 | 草屯 | 東向 | 路段 | 、 | 國 | 10 | 燕巢 | - | 燕巢 | 系統 | 東向 | 路段 | 。 |

Initialize traditional Chinese dictionary

Download the traditional Chinese dictionary from jieba-tw.

jieba.set_dictionary(file_path)

Add your own project-specific dictionary

jieba.load_userdict(file_path)

Note

The user-defined dictionary should be a plain-text (.txt) file in which each line contains a new word, its frequency (optional), and its POS tag (optional). The values on a line are separated by whitespace.
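As a minimal sketch of that file format, the snippet below writes a small user dictionary to disk. The words, frequencies, POS tags, and the file name `userdict.txt` are hypothetical choices for illustration; the resulting path is what you would pass to `jieba.load_userdict()`.

```python
from pathlib import Path

# Hypothetical entries: (word, frequency or None, POS tag or None).
entries = [
    ("關西服務區", 10, "n"),  # word + frequency + POS tag
    ("水上系統", 5, None),    # word + frequency only
    ("回堵", None, None),     # word only
]


def format_entry(word, freq=None, tag=None):
    """Join the non-empty fields of one entry with single spaces."""
    fields = [word]
    if freq is not None:
        fields.append(str(freq))
    if tag is not None:
        fields.append(tag)
    return " ".join(fields)


lines = [format_entry(*entry) for entry in entries]
dict_path = Path("userdict.txt")  # assumed file name
dict_path.write_text("\n".join(lines) + "\n", encoding="utf-8")

print(dict_path.read_text(encoding="utf-8"))
# The file could then be loaded with: jieba.load_userdict(str(dict_path))
```

Generating the file from a Python list keeps the project vocabulary in one place and avoids hand-editing mistakes such as stray tabs.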

Add ad-hoc words to dictionary

jieba.add_word(word, freq=None, tag=None)

Remove words

jieba.del_word(word)

Word segmentation

jieba.cut() returns a generator object

jieba.lcut() returns a List object

# full

jieba.cut(TEXT, cut_all=True)

jieba.lcut(TEXT, cut_all=True)

# default

jieba.cut(TEXT, cut_all=False)

jieba.lcut(TEXT, cut_all=False)

# `cut_all`: True for full pattern; False for accurate pattern.

print('|'.join(jieba.cut(text, cut_all=False))) ## default accurate pattern

print('|'.join(jieba.cut(text, cut_all=True))) ## full pattern

|高速公路|局說|,|目前|在|國道|3|號|北向|水上|系統|至|中埔|路段|車多|壅塞|,|已回|堵約|3|公里|。|另外|,|國道|1|號|北向|仁德|至|永康|路段|路段|,|已回|堵|約|有|7|公里|。|建議|駕駛人|提前|避開|壅塞|路段|改道|行駛|,|行經車|多|路段|請|保持|行車|安全|距離|,|小心|行駛|。|

|國道|車多|壅塞|路段|還有國|1|內湖|-|五堵|北向|路段|、|楊梅|-|新竹|南向|路段|;|國|3|三鶯|-|關|西服|務區|南向|路段|、|快官|-|霧峰|南向|路段|、|水上|系統|-|中埔|北向|路段|;|國|6|霧峰|系統|-|東|草屯|東向|路段|、|國|10|燕巢|-|燕巢|系統|東向|路段|。|

|

||高速|高速公路|公路|公路局|路局|說|,|目前|在|國|道|3|號|北向|水上|系|統|至|中|埔|路段|車|多|壅塞|,|已|回|堵|約|3|公里|。|另外|,|國|道|1|號|北向|仁德|至|永康|康路|路段|路段|,|已|回|堵|約|有|7|公里|。|建|議|駕|駛|人|提前|避|開|壅塞|路段|改道|道行|駛|,|行|經|車|多路|路段|請|保持|行|車|安全|全距|離|,|小心|行|駛|。|

||國|道|車|多|壅塞|路段|還|有|國|1|內|湖|-|五堵|北向|路段|、|楊|梅|-|新竹|竹南|南向|路段|;|國|3|三|鶯|-|關|西服|務|區|南向|路段|、|快|官|-|霧|峰|南向|路段|、|水上|系|統|-|中|埔|北向|路段|;|國|6|霧|峰|系|統|-|東|草屯|東|向|路段|、|國|10|燕巢|-|燕巢|系|統|東|向|路段|。|

|

See also

In the jieba.cut() function of the Jieba Python library, the cut_all parameter determines whether to use the full mode or the accurate mode for word segmentation.

Here’s what each mode means:

Full Mode (cut_all=True):

In full mode, jieba scans the text for every word it can find in its dictionary and returns all of them, so the resulting segments can overlap (e.g., 高速, 高速公路, 公路, and 公路局 in the output above).

This mode is more aggressive and yields more segments, because it does not resolve the ambiguity between competing words.

It is useful when coverage matters more than precision, since it tries to extract as many words as possible.

Accurate Mode (cut_all=False, or cut_all not specified):

In accurate mode, jieba chooses a single, non-overlapping segmentation of the text into meaningful words based on its word dictionary.

This mode is more conservative and typically results in fewer word segments than the full mode.

It is suitable for general-purpose text segmentation tasks where accurate word segmentation is desired.
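The difference between the two modes can be illustrated with a toy segmenter. This is only a sketch of the general idea (a word graph built from a dictionary, then either all words or the single most probable path); it is not jieba's code. The dictionary and its frequencies are made up, and jieba's handling of out-of-dictionary words is omitted, so the accurate-mode result here may differ from what jieba returns.

```python
import math

# Toy dictionary with made-up frequencies (hypothetical values, not jieba's).
FREQ = {"高速": 50, "高速公路": 30, "公路": 60, "公路局": 20, "路局": 5, "說": 80}
LOG_TOTAL = math.log(sum(FREQ.values()))


def build_dag(text):
    """For each start index, list the end indices of dictionary words starting there."""
    dag = {}
    for i in range(len(text)):
        ends = [j for j in range(i + 1, len(text) + 1) if text[i:j] in FREQ]
        dag[i] = ends or [i + 1]  # unknown character: fall back to a one-character token
    return dag


def full_mode(text):
    """Return every multi-character dictionary word, plus characters no word covers."""
    dag, words, covered = build_dag(text), [], 0
    for i in range(len(text)):
        multi = [j for j in dag[i] if j - i > 1]
        for j in multi:
            words.append(text[i:j])
            covered = max(covered, j)
        if not multi and i >= covered:
            words.append(text[i])
            covered = i + 1
    return words


def accurate_mode(text):
    """Pick the single path through the graph with the highest total log-probability."""
    dag, n = build_dag(text), len(text)
    best = {n: (0.0, n)}  # best[i] = (score of best path from i, end of its first word)
    for i in range(n - 1, -1, -1):
        best[i] = max(
            (math.log(FREQ.get(text[i:j], 1)) - LOG_TOTAL + best[j][0], j)
            for j in dag[i]
        )
    words, i = [], 0
    while i < n:
        j = best[i][1]
        words.append(text[i:j])
        i = j
    return words


sample = "高速公路局說"
print("full:    ", "|".join(full_mode(sample)))
print("accurate:", "|".join(accurate_mode(sample)))
```

The full-mode list contains overlapping words, while the accurate-mode list always concatenates back to the original string.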

Segmentation using CKIP Transformer#

For more detail on the installation of ckip-transformers, please read their documentation.

pip install -U ckip-transformers

## Colab Only

!pip install -U ckip-transformers

Collecting ckip-transformers

Downloading ckip_transformers-0.3.4-py3-none-any.whl (26 kB)

Installing collected packages: ckip-transformers

Successfully installed ckip-transformers-0.3.4

import ckip_transformers

from ckip_transformers.nlp import CkipWordSegmenter, CkipPosTagger, CkipNerChunker

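When initializing the models:

level specifies three levels of segmentation resolution. Level 1 is the fastest while Level 3 is the most accurate. Note that the code below selects the model by name (model="bert-base") rather than by level.

device = 0 is for GPU computing.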

%%time

# Initialize drivers

ws_driver = CkipWordSegmenter(model="bert-base", device=0)

pos_driver = CkipPosTagger(model="bert-base", device=0)

ner_driver = CkipNerChunker(model="bert-base", device=0)

CPU times: user 3.59 s, sys: 3.43 s, total: 7.02 s

Wall time: 22.2 s

We usually break the texts into smaller chunks for word segmentation.

But how we break the texts can be tricky.

Paragraph breaks?

Chunks based on period-like punctuations?

Chunks based on some other delimiters?
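The first two options can be sketched with the standard library alone. The helper names and the set of sentence-ending characters below are assumptions made for illustration; adjust the set to your own data.

```python
import re


def split_paragraphs(text):
    """Split on line breaks and drop empty lines."""
    return [p.strip() for p in text.split("\n") if p.strip()]


def split_sentences(paragraph, enders="。！？!?"):
    """Split after period-like punctuation, keeping the punctuation with its sentence."""
    cls = re.escape(enders)
    pattern = f"[^{cls}]+[{cls}]*"
    return [s.strip() for s in re.findall(pattern, paragraph) if s.strip()]


sample = "已回堵約3公里。另外,國道1號已回堵約有7公里。\n建議駕駛人改道行駛,小心行駛"
for paragraph in split_paragraphs(sample):
    print(split_sentences(paragraph))
```

Keeping the punctuation attached to each chunk makes it possible to rebuild the original paragraph by simple concatenation.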

# Input text

text = """

高速公路局說,目前在國道3號北向水上系統至中埔路段車多壅塞,已回堵約3公里。另外,國道1號北向仁德至永康路段路段,已回堵約有7公里。建議駕駛人提前避開壅塞路段改道行駛,行經車多路段請保持行車安全距離,小心行駛。

國道車多壅塞路段還有國1內湖-五堵北向路段、楊梅-新竹南向路段;國3三鶯-關西服務區南向路段、快官-霧峰南向路段、水上系統-中埔北向路段;國6霧峰系統-東草屯東向路段、國10燕巢-燕巢系統東向路段。

"""

# paragraph breaks

text = [p for p in text.split('\n') if len(p) != 0]

print(text)

['高速公路局說,目前在國道3號北向水上系統至中埔路段車多壅塞,已回堵約3公里。另外,國道1號北向仁德至永康路段路段,已回堵約有7公里。建議駕駛人提前避開壅塞路段改道行駛,行經車多路段請保持行車安全距離,小心行駛。', '國道車多壅塞路段還有國1內湖-五堵北向路段、楊梅-新竹南向路段;國3三鶯-關西服務區南向路段、快官-霧峰南向路段、水上系統-中埔北向路段;國6霧峰系統-東草屯東向路段、國10燕巢-燕巢系統東向路段。']

# Run pipeline

ws = ws_driver(text)

pos = pos_driver(ws)

ner = ner_driver(text)

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 1053.85it/s]

Inference: 100%|██████████| 1/1 [00:01<00:00, 1.68s/it]

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 3837.42it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 22.82it/s]

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 1839.20it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 36.79it/s]

When doing the word segmentation, there are a few parameters to consider in ws_driver():

use_delim: ckip-transformers breaks the texts into sentences, by default using the delimiters ',,。::;;!!??', and concatenates them back in the outputs.

delim_set: to specify self-defined sentence delimiters

batch_size

max_length

# Enable sentence segmentation

ws = ws_driver(text, use_delim=True)

ner = ner_driver(text, use_delim=True)

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 1773.49it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 22.89it/s]

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 1828.38it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 23.02it/s]

# Disable sentence segmentation

pos = pos_driver(ws, use_delim=False)

# Use new line characters and tabs for sentence segmentation

pos = pos_driver(ws, delim_set='\n\t')

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 1971.01it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 46.98it/s]

Tokenization: 100%|██████████| 2/2 [00:00<00:00, 1895.30it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 43.99it/s]

A quick comparison of the results based on jieba and ckip-transformers:

print(' | '.join(text_jb))

| 高速公路 | 局說 | , | 目前 | 在 | 國道 | 3 | 號 | 北向 | 水上 | 系統 | 至 | 中埔 | 路段 | 車多 | 壅塞 | , | 已回 | 堵約 | 3 | 公里 | 。 | 另外 | , | 國道 | 1 | 號 | 北向 | 仁德 | 至 | 永康 | 路段 | 路段 | , | 已回 | 堵 | 約 | 有 | 7 | 公里 | 。 | 建議 | 駕駛人 | 提前 | 避開 | 壅塞 | 路段 | 改道 | 行駛 | , | 行經車 | 多 | 路段 | 請 | 保持 | 行車 | 安全 | 距離 | , | 小心 | 行駛 | 。 |

| 國道 | 車多 | 壅塞 | 路段 | 還有國 | 1 | 內湖 | - | 五堵 | 北向 | 路段 | 、 | 楊梅 | - | 新竹 | 南向 | 路段 | ; | 國 | 3 | 三鶯 | - | 關 | 西服 | 務區 | 南向 | 路段 | 、 | 快官 | - | 霧峰 | 南向 | 路段 | 、 | 水上 | 系統 | - | 中埔 | 北向 | 路段 | ; | 國 | 6 | 霧峰 | 系統 | - | 東 | 草屯 | 東向 | 路段 | 、 | 國 | 10 | 燕巢 | - | 燕巢 | 系統 | 東向 | 路段 | 。 |

print('\n\n'.join([' | '.join(p) for p in ws]))

高速 | 公路局 | 說 | , | 目前 | 在 | 國道 | 3號 | 北向 | 水上 | 系統 | 至 | 中埔 | 路段 | 車 | 多 | 壅塞 | , | 已 | 回堵 | 約 | 3 | 公里 | 。 | 另外 | , | 國道 | 1號 | 北 | 向 | 仁德 | 至 | 永康 | 路段 | 路段 | , | 已 | 回堵 | 約 | 有 | 7 | 公里 | 。 | 建議 | 駕駛人 | 提前 | 避開 | 壅塞 | 路段 | 改道 | 行駛 | , | 行經 | 車 | 多 | 路段 | 請 | 保持 | 行車 | 安全 | 距離 | , | 小心 | 行駛 | 。

國道 | 車 | 多 | 壅塞 | 路段 | 還 | 有 | 國1 | 內湖 | - | 五堵 | 北向 | 路段 | 、 | 楊梅 | - | 新竹 | 南向 | 路段 | ; | 國3 | 三鶯 | - | 關西 | 服務區 | 南向 | 路段 | 、 | 快官 | - | 霧峰 | 南向 | 路段 | 、 | 水上 | 系統 | - | 中埔 | 北向 | 路段 | ; | 國6 | 霧峰 | 系統 | - | 東 | 草屯 | 東向 | 路段 | 、 | 國10 | 燕巢 | - | 燕巢 | 系統 | 東向 | 路段 | 。

# Pack word segmentation and part-of-speech results

def pack_ws_pos_sentece(sentence_ws, sentence_pos):
    assert len(sentence_ws) == len(sentence_pos)
    res = []
    for word_ws, word_pos in zip(sentence_ws, sentence_pos):
        res.append(f'{word_ws}({word_pos})')
    return '\u3000'.join(res)

# Show results

for sentence, sentence_ws, sentence_pos, sentence_ner in zip(
        text, ws, pos, ner):
    print(sentence)
    print(pack_ws_pos_sentece(sentence_ws, sentence_pos))
    for entity in sentence_ner:
        print(entity)
    print()

高速公路局說,目前在國道3號北向水上系統至中埔路段車多壅塞,已回堵約3公里。另外,國道1號北向仁德至永康路段路段,已回堵約有7公里。建議駕駛人提前避開壅塞路段改道行駛,行經車多路段請保持行車安全距離,小心行駛。

高速(VH) 公路局(Nc) 說(VE) ,(COMMACATEGORY) 目前(Nd) 在(P) 國道(Nc) 3號(Neu) 北向(Nc) 水上(Nc) 系統(Na) 至(Caa) 中埔(Nc) 路段(Na) 車(Na) 多(D) 壅塞(VH) ,(COMMACATEGORY) 已(D) 回堵(VC) 約(Da) 3(Neu) 公里(Nf) 。(PERIODCATEGORY) 另外(Cbb) ,(COMMACATEGORY) 國道(Nc) 1號(Nc) 北(Ncd) 向(P) 仁德(Nc) 至(Caa) 永康(Nc) 路段(Na) 路段(Na) ,(COMMACATEGORY) 已(D) 回堵(VC) 約(Da) 有(V_2) 7(Neu) 公里(Nf) 。(PERIODCATEGORY) 建議(VE) 駕駛人(Na) 提前(VB) 避開(VC) 壅塞(VH) 路段(Na) 改道(VA) 行駛(VA) ,(COMMACATEGORY) 行經(VCL) 車(Na) 多(VH) 路段(Na) 請(VF) 保持(VJ) 行車(VA) 安全(VH) 距離(Na) ,(COMMACATEGORY) 小心(VK) 行駛(VA) 。(PERIODCATEGORY)

NerToken(word='高速公路局', ner='ORG', idx=(0, 5))

NerToken(word='國道3號', ner='FAC', idx=(10, 14))

NerToken(word='中埔', ner='GPE', idx=(21, 23))

NerToken(word='3公里', ner='QUANTITY', idx=(34, 37))

NerToken(word='國道1號', ner='FAC', idx=(41, 45))

NerToken(word='仁德', ner='GPE', idx=(47, 49))

NerToken(word='永康路段', ner='FAC', idx=(50, 54))

NerToken(word='7公里', ner='QUANTITY', idx=(62, 65))

國道車多壅塞路段還有國1內湖-五堵北向路段、楊梅-新竹南向路段;國3三鶯-關西服務區南向路段、快官-霧峰南向路段、水上系統-中埔北向路段;國6霧峰系統-東草屯東向路段、國10燕巢-燕巢系統東向路段。

國道(Na) 車(Na) 多(VH) 壅塞(VH) 路段(Na) 還(D) 有(V_2) 國1(Na) 內湖(Nc) -(DASHCATEGORY) 五堵(Nc) 北向(Na) 路段(Na) 、(PAUSECATEGORY) 楊梅(Nc) -(DASHCATEGORY) 新竹(Nc) 南向(Na) 路段(Na) ;(SEMICOLONCATEGORY) 國3(Na) 三鶯(Nc) -(DASHCATEGORY) 關西(Nc) 服務區(Nc) 南向(A) 路段(Na) 、(PAUSECATEGORY) 快官(Na) -(DASHCATEGORY) 霧峰(Nc) 南向(Nc) 路段(Na) 、(PAUSECATEGORY) 水上(Nc) 系統(Na) -(DASHCATEGORY) 中埔(Nc) 北向(Na) 路段(Na) ;(SEMICOLONCATEGORY) 國6(Na) 霧峰(Nc) 系統(Na) -(DASHCATEGORY) 東(Ncd) 草屯(Nc) 東向(Na) 路段(Na) 、(PAUSECATEGORY) 國10(Na) 燕巢(Nc) -(DASHCATEGORY) 燕巢(Nc) 系統(Na) 東向(VH) 路段(Na) 。(PERIODCATEGORY)

NerToken(word='國道', ner='FAC', idx=(0, 2))

NerToken(word='國3三鶯-關西服務區', ner='FAC', idx=(32, 42))

NerToken(word='中埔', ner='GPE', idx=(62, 64))

NerToken(word='國6霧峰系統', ner='FAC', idx=(69, 75))

NerToken(word='東草屯東向路段', ner='FAC', idx=(76, 83))

NerToken(word='國10燕巢-燕巢系統', ner='FAC', idx=(84, 94))
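Judging from the output above, the idx field of each entity appears to hold (start, end) character offsets into the input sentence. The sketch below checks that reading and groups entities by label. The `Entity` class is a stand-in defined here so that the example runs without loading any model; it only mirrors the three fields printed above, and the sentence is a shortened copy of the first paragraph.

```python
from collections import defaultdict
from typing import NamedTuple, Tuple


class Entity(NamedTuple):
    """Stand-in with the same three fields as the NerToken objects printed above."""
    word: str
    ner: str
    idx: Tuple[int, int]


sentence = "高速公路局說,目前在國道3號北向水上系統至中埔路段車多壅塞,已回堵約3公里。"
entities = [
    Entity(word="高速公路局", ner="ORG", idx=(0, 5)),
    Entity(word="國道3號", ner="FAC", idx=(10, 14)),
    Entity(word="中埔", ner="GPE", idx=(21, 23)),
    Entity(word="3公里", ner="QUANTITY", idx=(34, 37)),
]

by_label = defaultdict(list)
for ent in entities:
    start, end = ent.idx
    # The offsets should slice the entity text back out of the sentence.
    assert sentence[start:end] == ent.word
    by_label[ent.ner].append(ent.word)

print(dict(by_label))
```

Grouping by label like this is a convenient first step when you only need, say, the place names or facilities mentioned in each paragraph.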

Challenges of Word Segmentation#

text2 = [
    "女人沒有她男人甚麼也不是",
    "女人沒有了男人將會一無所有",
    "下雨天留客天留我不留",
    "行路人等不得在此大小便",
    "兒的生活好痛苦一點也沒有糧食多病少掙了很多錢",
]

ws2 = ws_driver(text2)

pos2 = pos_driver(ws2)

ner2 = ner_driver(text2)

Tokenization: 100%|██████████| 5/5 [00:00<00:00, 17848.10it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 41.78it/s]

Tokenization: 100%|██████████| 5/5 [00:00<00:00, 19765.81it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 66.31it/s]

Tokenization: 100%|██████████| 5/5 [00:00<00:00, 18094.50it/s]

Inference: 100%|██████████| 1/1 [00:00<00:00, 59.80it/s]

# Show results

for sentence, sentence_ws, sentence_pos, sentence_ner in zip(
        text2, ws2, pos2, ner2):
    print(sentence)
    print(pack_ws_pos_sentece(sentence_ws, sentence_pos))
    for entity in sentence_ner:
        print(entity)
    print()

女人沒有她男人甚麼也不是

女人(Na) 沒有(VJ) 她(Nh) 男人(Na) 甚麼(Nep) 也(D) 不(D) 是(SHI)

女人沒有了男人將會一無所有

女人(Na) 沒有(VJ) 了(Di) 男人(Na) 將(D) 會(D) 一無所有(VH)

下雨天留客天留我不留

下雨天(Nd) 留(VC) 客(Na) 天(Na) 留(VC) 我(Nh) 不(D) 留(VC)

行路人等不得在此大小便

行路人(Na) 等(Cab) 不得(D) 在(P) 此(Nep) 大小便(VA)

兒的生活好痛苦一點也沒有糧食多病少掙了很多錢

兒(Na) 的(DE) 生活(Na) 好(Dfa) 痛苦(VH) 一點(Neqa) 也(D) 沒有(VJ) 糧食(Na) 多(VH) 病(Na) 少(VH) 掙(VC) 了(Di) 很多(Neqa) 錢(Na)

Tip

It is still not clear to me how to include a user-defined dictionary in ckip-transformers. I suspect this is a problem for all deep-learning-based segmenters.

However, I know that it is possible to use a self-defined dictionary in ckiptagger.

If you know where and how to incorporate a self-defined dictionary in ckip-transformers, please let me know. Thanks!
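One possible workaround, independent of any particular segmenter, is to post-process the token list and merge adjacent tokens whenever their concatenation is in your own word list. The sketch below is not a ckip-transformers feature; the function name, the `max_span` parameter, and the word list are hypothetical. It can only merge tokens, so it cannot repair a token that swallows part of a neighboring word.

```python
def merge_with_userdict(tokens, user_words, max_span=4):
    """Greedily merge up to max_span adjacent tokens that together form a user word."""
    merged, i = [], 0
    while i < len(tokens):
        # Try the longest candidate span first, down to two tokens.
        for span in range(min(max_span, len(tokens) - i), 1, -1):
            candidate = "".join(tokens[i:i + span])
            if candidate in user_words:
                merged.append(candidate)
                i += span
                break
        else:
            # No user word starts here: keep the token unchanged.
            merged.append(tokens[i])
            i += 1
    return merged


# Part of the jieba output shown earlier, where 關西服務區 was split incorrectly.
tokens = ["國", "3", "三鶯", "-", "關", "西服", "務區", "南向", "路段"]
user_words = {"關西服務區"}  # hypothetical project-specific word list
print(" | ".join(merge_with_userdict(tokens, user_words)))
```

Because the merged tokens still concatenate to the original string, the step can be added after any segmenter without changing the rest of the pipeline.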

Other Modules for Chinese Word Segmentation#

You can try other modules and check their segmentation results and qualities:

ckiptagger (probably the predecessor of ckip-transformers?)

When you play with other segmenters, please keep in mind a few important issues when choosing the segmenter for your project:

Most of the segmenters were trained on simplified Chinese.

It is important to know whether the segmenter allows users to add a self-defined dictionary to improve segmentation performance.

The documentation of the tagsets (i.e., POS, NER) needs to be well-maintained so that users can easily use the segmentation results in downstream projects.

Chinese NLP Using spacy#

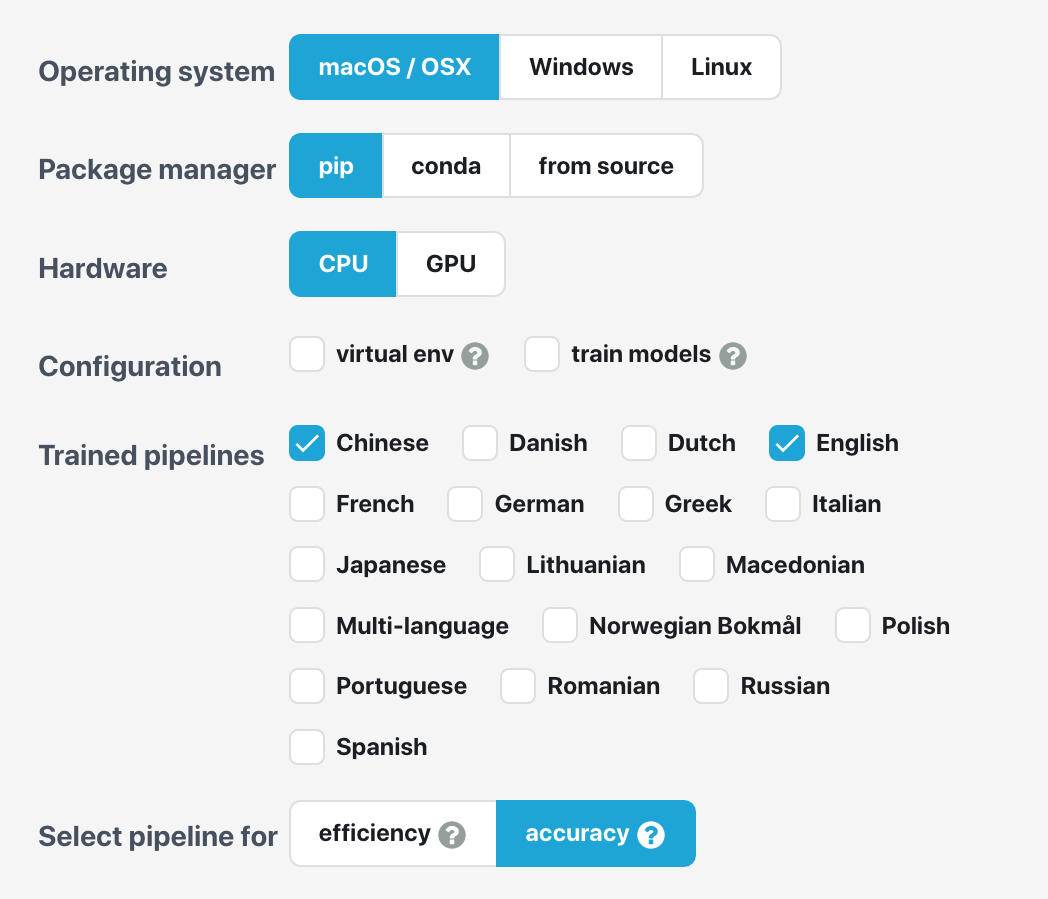

spacy 3+ supports transformer-based NLP. Please make sure you have the most recent version of the module.

$ pip show spacy

Documentation of spacy

Installation steps:

$ conda activate python-notes

$ conda install -c conda-forge spacy

$ python -m spacy download zh_core_web_trf

$ python -m spacy download zh_core_web_sm

$ python -m spacy download en_core_web_trf

$ python -m spacy download en_core_web_sm

spacy provides a good interface to help users install the package. Please set up the relevant parameters there and find your own installation commands.

## Colab Only

!python -m spacy download zh_core_web_trf

Collecting zh-core-web-trf==3.7.2

Successfully installed curated-tokenizers-0.0.9 curated-transformers-0.1.1 spacy-curated-transformers-0.2.2 spacy-pkuseg-0.0.33 zh-core-web-trf-3.7.2

✔ Download and installation successful

You can now load the package via spacy.load('zh_core_web_trf')

⚠ Restart to reload dependencies

If you are in a Jupyter or Colab notebook, you may need to restart Python in

order to load all the package's dependencies. You can do this by selecting the

'Restart kernel' or 'Restart runtime' option.

import spacy

from spacy import displacy

# load language model

nlp_zh = spacy.load('zh_core_web_trf') ## disable=["parser"]

# parse text

doc = nlp_zh('這是一個中文的句子')

Note

For more information on POS tags, see spaCy POS tag scheme documentation.

# parts of speech tagging

for token in doc:
    print((
        token.text,
        token.pos_,
        token.tag_,
        token.dep_,
        token.is_alpha,
        token.is_stop,
    ))

('這', 'PRON', 'PN', 'nsubj', True, False)

('是', 'VERB', 'VC', 'cop', True, True)

('一', 'NUM', 'CD', 'nummod', True, True)

('個', 'NUM', 'M', 'mark:clf', True, False)

('中文', 'NOUN', 'NN', 'nmod:assmod', True, False)

('的', 'PART', 'DEG', 'case', True, True)

('句子', 'NOUN', 'NN', 'ROOT', True, False)

' | '.join([token.text + "_" + token.tag_ for token in doc])

'這_PN | 是_VC | 一_CD | 個_M | 中文_NN | 的_DEG | 句子_NN'

## Check meaning of a POS tag

spacy.explain('NN')

'noun, singular or mass'

Visualizing linguistic features#

# Visualize

displacy.render(doc, style="dep")

options = {
    "compact": True,
    "bg": "#09a3d5",
    "color": "white",
    "font": "Source Sans Pro",
    "distance": 120
}

displacy.render(doc, style="dep", options=options)

To process multiple documents from a large corpus, it is more efficient to work on them in batches of texts.

spaCy’s nlp.pipe() method takes an iterable of texts and yields processed Doc objects. (That is, nlp.pipe() returns a generator.)

Use the disable keyword argument to disable components we don’t need, either when loading a pipeline or during processing with nlp.pipe. This makes processing more efficient.

Please read spaCy’s documentation on preprocessing.

doc2 = nlp_zh.pipe(text)

for d in doc2:
    print(' | '.join([token.text + "_" + token.tag_ for token in d]) + '\n')

高速_JJ | 公路_NN | 局說_NN | ,_PU | 目前_NT | 在_P | 國道_NN | 3_OD | 號_NN | 北向_NN | 水上_NN | 系統_NN | 至_CC | 中埔_NR | 路段_NN | 車多_NN | 壅塞_VA | ,_PU | 已_AD | 回_VV | 堵約_VV | 3_CD | 公里_M | 。_PU | 另外_AD | ,_PU | 國道_NN | 1_OD | 號_NN | 北向_VV | 仁德_NR | 至_CC | 永康_NR | 路段_NN | 路段_NN | ,_PU | 已_AD | 回_VV | 堵約_VV | 有_VE | 7_CD | 公里_M | 。_PU | 建議_VV | 駕駛人_NN | 提前_AD | 避開_VV | 壅塞_JJ | 路段_NN | 改道_VV | 行駛_NN | ,_PU | 行經_VV | 車_NN | 多_VA | 路段_NN | 請_VV | 保持_VV | 行車_NN | 安全_JJ | 距離_NN | ,_PU | 小心_AD | 行駛_VV | 。_PU

國道_NN | 車多_NN | 壅塞_JJ | 路段_NN | 還_AD | 有_VE | 國_NN | 1內湖_NR | -_PU | 五_NR | 堵北_NR | 向_P | 路段_NN | 、_PU | 楊梅_NR | -_PU | 新竹_NR | 南向_JJ | 路段_NN | ;_PU | 國3三_NR | 鶯_NR | -_PU | 關西_NR | 服務_NN | 區南_NN | 向_P | 路段_NN | 、_PU | 快官_NN | -_PU | 霧峰_NR | 南向_NN | 路段_NN | 、_PU | 水上_NR | 系統_NN | -_PU | 中_NR | 埔北_NR | 向_P | 路段_NN | ;_PU | 國6_NN | 霧峰_NR | 系統_NN | -_PU | 東草_NR | 屯東_NR | 向_P | 路段_NN | 、_PU | 國10_NN | 燕巢_NR | -_PU | 燕巢_NR | 系_NN | 統東_NN | 向_P | 路段_NN | 。_PU

Tip

Please read the documentation of spacy very carefully for additional ways to extract other useful linguistic properties.

You may need that for the assignments.

Conclusion#

Different segmenters have very different behaviors.

The choice of a proper segmenter may boil down to the following crucial questions:

How do we evaluate the segmenter’s performance?

What is the objective of getting the word boundaries?

What is a word in Chinese?
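For the first question, one common approach is to compare a segmenter's output with a reference segmentation and score the words whose boundaries match exactly. The sketch below computes word-level precision, recall, and F1 under that assumption. The function names are made up for illustration, and the "gold" segmentation is simply the ckip-transformers output from earlier, treated as a reference for the sake of the example.

```python
def to_spans(tokens):
    """Convert a token list to a set of (start, end) character spans."""
    spans, start = set(), 0
    for tok in tokens:
        spans.add((start, start + len(tok)))
        start += len(tok)
    return spans


def segmentation_prf(gold_tokens, pred_tokens):
    """Word-level precision, recall, and F1 based on exactly matching spans."""
    if "".join(gold_tokens) != "".join(pred_tokens):
        raise ValueError("gold and predicted tokens must cover the same text")
    gold, pred = to_spans(gold_tokens), to_spans(pred_tokens)
    correct = len(gold & pred)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


gold = ["關西", "服務區", "南向", "路段"]        # reference (assumed correct here)
pred = ["關", "西服", "務區", "南向", "路段"]    # jieba output shown earlier
p, r, f = segmentation_prf(gold, pred)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

A score like this is only as meaningful as the reference segmentation, which brings us back to the last question: the answer depends on what counts as a word for your task.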