Neural Language Model: A Start#

In this tutorial, we will look at a naive example of a neural language model.

Given a corpus, we can build a neural language model, which will learn to predict the next word given a specified limited context.

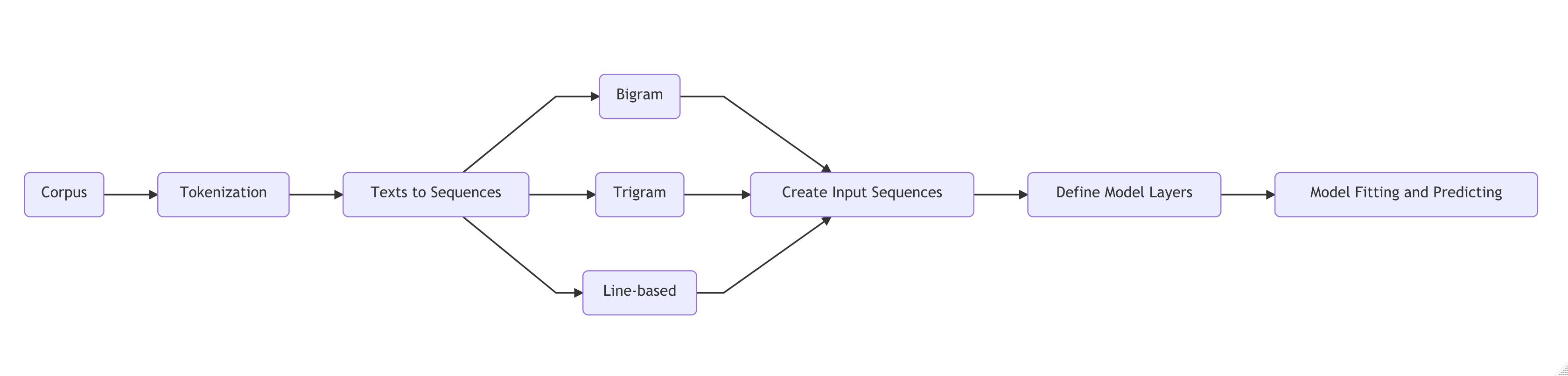

Depending on the size of the limited context, we can implement different types of neural language models:

Bigram-based neural language model: The model uses one preceding word for the next-word prediction.

Trigram-based neural language model: The model uses two preceding words for the next-word prediction.

Line-based neural language model: The model uses all the preceding words in the “sequence” for the next-word prediction.

Discourse-based neural language model: The model uses inter-sentential information for next-word prediction (e.g., BERT).
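The difference between these model types comes down to how much left context is paired with each target word. The sketch below is a minimal plain-Python illustration, not part of the tutorial's pipeline: the helper name `context_target_pairs` is hypothetical. It builds (context, target) pairs for a fixed context size, and for the line-based case, where the context grows with the sequence.

```python
def context_target_pairs(tokens, context_size=None):
    """Pair each target word with its preceding context.

    context_size=1 gives bigram-style pairs, context_size=2 gives
    trigram-style pairs, and context_size=None uses all preceding
    words in the sequence (the line-based case).
    """
    pairs = []
    for i in range(1, len(tokens)):
        if context_size is None:
            context = tokens[:i]
        elif i < context_size:
            continue  # not enough preceding words for a full context
        else:
            context = tokens[i - context_size:i]
        pairs.append((tuple(context), tokens[i]))
    return pairs


tokens = "jack and jill went up the hill".split()

bigram_pairs = context_target_pairs(tokens, context_size=1)
trigram_pairs = context_target_pairs(tokens, context_size=2)
line_pairs = context_target_pairs(tokens)

print(bigram_pairs[:2])   # one preceding word per target
print(trigram_pairs[:2])  # two preceding words per target
print(line_pairs[:2])     # all preceding words per target
```

Note that a larger fixed context yields fewer training pairs from the same line, because the first few words do not have enough preceding words.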

This tutorial will demonstrate how to build a bigram-based language model.

In the Assignments, you need to extend the same rationale to other types of language models.

Workflow of Neural Language Model#

See also

We frequently encounter the need to train models on large datasets, which can be memory-intensive and difficult to load entirely into the local machine’s memory at once. Therefore, it’s essential to master techniques for optimizing the data loading process during model training.

TensorFlow provides an effective Dataset API for this. Using the TensorFlow Dataset API involves several steps to create and manipulate datasets efficiently. Here’s a step-by-step guide:

Import TensorFlow: Make sure you have TensorFlow installed and import it into your Python script or notebook.

import tensorflow as tf

Create Dataset from Data: There are different ways to create a dataset, such as from a list, NumPy array, tensors, or files.

# From a list
dataset = tf.data.Dataset.from_tensor_slices([1, 2, 3, 4, 5])

# From a NumPy array
import numpy as np
arr = np.array([1, 2, 3, 4, 5])
dataset = tf.data.Dataset.from_tensor_slices(arr)

# From tensors
tensor1 = tf.constant([1, 2, 3])
tensor2 = tf.constant([4, 5, 6])
dataset = tf.data.Dataset.from_tensors((tensor1, tensor2))

# From files
file_paths = ['file1.txt', 'file2.txt']
dataset = tf.data.TextLineDataset(file_paths)

Transformations: Apply transformations to the dataset using methods like map(), filter(), batch(), shuffle(), etc.

# Map a function to each element
dataset = dataset.map(lambda x: x * 2)

# Filter elements
dataset = dataset.filter(lambda x: x > 0)

# Batch elements
batched_dataset = dataset.batch(32)

# Shuffle elements
shuffled_dataset = dataset.shuffle(buffer_size=1000)

# Repeat dataset
repeated_dataset = dataset.repeat(count=3)

Iterate Over the Dataset: Use a for loop or iterator to iterate over the dataset and process the elements.

for item in dataset:
    print(item)

# If you need to batch the dataset first
for batch in batched_dataset:
    print(batch)

Use in Model Training: Pass the dataset directly to the model’s fit() method for training.

model.fit(dataset, epochs=10)

Performance Optimization: For better performance, consider using prefetching, caching, and parallelism.

# Cache elements after the first pass over the data
dataset = dataset.cache()

# Prefetch so that data preparation overlaps with model execution
dataset = dataset.prefetch(buffer_size=tf.data.experimental.AUTOTUNE)

Handling Complex Data: For more complex data processing, you can use tf.py_function to apply Python functions to each element of the dataset.

def my_function(x):
    # Your custom Python code here
    return x * 2

dataset = dataset.map(lambda x: tf.py_function(my_function, [x], tf.int32))

These are the basic steps to use TensorFlow Dataset API effectively. Adjustments can be made based on the specific requirements of your project and the characteristics of your data.
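The core idea behind the steps above is that elements are produced lazily, one at a time, so the full dataset never has to sit in memory. The following plain-Python sketch mimics that idea with generators. It is only a conceptual analogy: the helper names `lazy_map`, `lazy_filter`, and `lazy_batch` are made up for illustration, and nothing here reflects how TensorFlow implements `tf.data` internally.

```python
from itertools import islice


def lazy_map(fn, items):
    """Yield fn(item) for each item, one at a time."""
    for item in items:
        yield fn(item)


def lazy_filter(pred, items):
    """Yield only the items for which pred(item) is true."""
    for item in items:
        if pred(item):
            yield item


def lazy_batch(items, batch_size):
    """Group items into lists of at most batch_size elements."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Nothing is computed until the pipeline is iterated.
source = range(1, 11)
doubled = lazy_map(lambda x: x * 2, source)
multiples_of_four = lazy_filter(lambda x: x % 4 == 0, doubled)
pipeline = lazy_batch(multiples_of_four, batch_size=2)

batches = list(pipeline)
print(batches)
```

In this sketch the last batch is shorter than the others, because the remaining elements are kept rather than dropped.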

Bigram Model#

A bigram-based language model assumes that the next word (to be predicted) depends only on one preceding word.

## Dependencies

import numpy as np

import tensorflow.keras as keras

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.utils import to_categorical, plot_model

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, LSTM, Embedding

Tokenization#

A quick reminder of important parameters for Tokenizer():

num_words: the maximum number of words to keep, based on word frequency. Only the most common num_words-1 words will be kept.

filters: a string where each element is a character that will be filtered from the texts. The default includes all punctuation, plus tabs and line breaks (except for the ' character).

lower: boolean. Whether to convert the texts to lowercase.

split: string. Separator for word splitting.

char_level: if True, every character will be treated as a token.

oov_token: if given, it will be added to word_index and used to replace out-of-vocabulary words during texts_to_sequences calls.

# source text

data = """ Jack and Jill went up the hill\n

To fetch a pail of water\n

Jack fell down and broke his crown\n

And Jill came tumbling after\n """

data = [l.strip() for l in data.split('\n') if l.strip() != ""]

# integer encode text

tokenizer = Tokenizer()

tokenizer.fit_on_texts(data)

# now the data consists of a sequence of word index integers

encoded = tokenizer.texts_to_sequences(data)

# determine the vocabulary size

vocab_size = len(tokenizer.word_index) + 1

print('Vocabulary Size: %d' % vocab_size)

print(tokenizer.word_index)

Vocabulary Size: 22

{'and': 1, 'jack': 2, 'jill': 3, 'went': 4, 'up': 5, 'the': 6, 'hill': 7, 'to': 8, 'fetch': 9, 'a': 10, 'pail': 11, 'of': 12, 'water': 13, 'fell': 14, 'down': 15, 'broke': 16, 'his': 17, 'crown': 18, 'came': 19, 'tumbling': 20, 'after': 21}
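To make the word index above less opaque, here is a plain-Python sketch that reproduces it for this small corpus. It is an approximation of what `fit_on_texts` does, not the Keras implementation: it assumes lowercasing and whitespace splitting, skips punctuation filtering (the rhyme contains none), and breaks frequency ties by first occurrence, which is consistent with the output shown above. The helper name `build_word_index` is hypothetical.

```python
from collections import Counter

texts = [
    "Jack and Jill went up the hill",
    "To fetch a pail of water",
    "Jack fell down and broke his crown",
    "And Jill came tumbling after",
]


def build_word_index(texts):
    """Map each word to an integer rank, most frequent word first."""
    counts = Counter()
    for text in texts:
        counts.update(text.lower().split())
    # sorted() is stable, so words with equal counts keep first-seen order.
    ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    # Indices start at 1; index 0 is left unused (reserved for padding).
    return {word: i for i, (word, _) in enumerate(ranked, start=1)}


word_index = build_word_index(texts)
vocab_size = len(word_index) + 1  # +1 accounts for the unused index 0

print(vocab_size)
print(word_index)
```

The `+ 1` in `vocab_size` is why the model later works with 22 classes even though the rhyme has only 21 distinct words.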

Text-to-Sequences and Training-Testing Sets#

Principles for bigrams extraction

When we create bigrams as the input sequences for network training, we need to make sure that we do not include meaningless bigrams, such as bigrams spanning text boundaries or sentence boundaries.

# create bigrams sequences

## bigrams holder

sequences = list()

## Extract bigrams from each text

for e in encoded:

    for i in range(1, len(e)):

        sequence = e[i - 1:i + 1]

        sequences.append(sequence)

print('Total Sequences: %d' % len(sequences))

sequences = np.array(sequences)

Total Sequences: 21

sequences[:5]

array([[2, 1],

[1, 3],

[3, 4],

[4, 5],

[5, 6]])
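To see why extracting bigrams line by line matters, the following sketch compares it with the naive alternative of joining all lines into one long token stream first. It uses plain word strings instead of integer indices for readability, and the helper name `bigrams` is introduced here only for illustration.

```python
lines = [
    "jack and jill went up the hill",
    "to fetch a pail of water",
    "jack fell down and broke his crown",
    "and jill came tumbling after",
]


def bigrams(tokens):
    """Return all adjacent word pairs in a token list."""
    return list(zip(tokens, tokens[1:]))


# Extract bigrams within each line, as in the tutorial code above.
per_line = [bg for line in lines for bg in bigrams(line.split())]

# Naive alternative: treat the whole text as a single sequence.
flattened = bigrams(" ".join(lines).split())

# Bigrams that exist only because two lines were glued together.
spurious = [bg for bg in flattened if bg not in per_line]

print(len(per_line), len(flattened))
print(spurious)
```

The per-line count matches the 21 sequences reported above, while the flattened version adds three pairs that straddle line boundaries.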

Each sequence contains both the input and the output of the network.

That is, for a bigram-based LM, the first word is the input X and the second word is the expected output y.

# split into X and y elements

X, y = sequences[:, 0], sequences[:, 1]

print(sequences[:5])

print(X[:5])

print(y[:5])

[[2 1]

[1 3]

[3 4]

[4 5]

[5 6]]

[2 1 3 4 5]

[1 3 4 5 6]
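One consequence of using a single preceding word as the input is that the same X can map to different y values, so no bigram model can be right every time on this data. The sketch below counts, for each context word, how often its most frequent successor occurs. It is a plain-Python side calculation on the rhyme, not part of the tutorial's pipeline. The resulting ceiling of 18/21 is consistent with the accuracy of 0.8571 at which the training log for this model levels off, although the sketch itself only establishes the upper bound.

```python
from collections import Counter, defaultdict

lines = [
    "jack and jill went up the hill",
    "to fetch a pail of water",
    "jack fell down and broke his crown",
    "and jill came tumbling after",
]

# For every context word, count which words follow it.
next_word_counts = defaultdict(Counter)
total = 0
for line in lines:
    tokens = line.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        next_word_counts[prev][nxt] += 1
        total += 1

# Best case: always predict the most frequent successor of each context.
best_correct = sum(max(c.values()) for c in next_word_counts.values())
ceiling = best_correct / total

# Context words followed by more than one distinct word.
ambiguous = {w: dict(c) for w, c in next_word_counts.items() if len(c) > 1}

print(f"{best_correct}/{total} = {ceiling:.4f}")
print(ambiguous)
```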

One-hot Representation of the Next-Word#

Because the neural language model is going to be a multi-class classifier (for word prediction), we need to convert our y into a one-hot encoding.

# one hot encode outputs

y = to_categorical(y, num_classes=vocab_size)

y.shape

(21, 22)

print(y[:5])

[[0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 1. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.]]
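For intuition, the one-hot conversion can be sketched in a few lines of plain Python. This is not how `to_categorical` is implemented; the helper name `one_hot` is hypothetical, and the input is the first five y values printed earlier.

```python
def one_hot(indices, num_classes):
    """Turn each integer index into a vector with a single 1.0."""
    rows = []
    for index in indices:
        row = [0.0] * num_classes
        row[index] = 1.0
        rows.append(row)
    return rows


y_first_five = [1, 3, 4, 5, 6]
one_hot_rows = one_hot(y_first_five, num_classes=22)

# Taking the position of the 1.0 in each row recovers the word index.
recovered = [row.index(1.0) for row in one_hot_rows]
print(recovered)
```

Column 0 is never set for this data, because the word indices start at 1.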

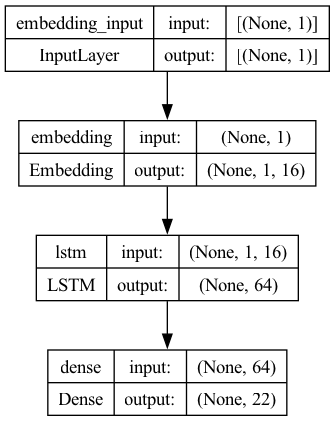

Define RNN Language Model#

# define model

model = Sequential()

model.add(Embedding(input_dim=vocab_size, output_dim=16, input_length=1))

model.add(LSTM(64)) # LSTM Complexity

model.add(Dense(vocab_size, activation='softmax'))

print(model.summary())


Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding (Embedding) (None, 1, 16) 352

lstm (LSTM) (None, 64) 20736

dense (Dense) (None, 22) 1430

=================================================================

Total params: 22518 (87.96 KB)

Trainable params: 22518 (87.96 KB)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

None
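The parameter counts in the summary can be checked by hand. The short calculation below uses the standard formulas for an embedding table, an LSTM layer (four gates, each with input weights, recurrent weights, and a bias), and a dense layer with bias. The variable names are chosen here for illustration.

```python
vocab_size = 22     # 21 words + 1 unused index 0
embedding_dim = 16  # output_dim of the Embedding layer
lstm_units = 64     # units of the LSTM layer

# Embedding: one vector of size embedding_dim per vocabulary entry.
embedding_params = vocab_size * embedding_dim

# LSTM: 4 gates, each with input weights, recurrent weights, and a bias.
lstm_params = 4 * (
    embedding_dim * lstm_units + lstm_units * lstm_units + lstm_units
)

# Dense: one weight per (LSTM unit, class) pair plus one bias per class.
dense_params = lstm_units * vocab_size + vocab_size

total_params = embedding_params + lstm_params + dense_params
print(embedding_params, lstm_params, dense_params, total_params)
```

These values agree with the 352, 20736, 1430, and 22518 reported in the summary.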

# compile network

model.compile(loss='categorical_crossentropy',

optimizer='adam',

metrics=['accuracy'])
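With one-hot targets, categorical cross-entropy for a single example reduces to the negative log of the probability assigned to the correct word. As a small worked example (plain Python rather than Keras's implementation, with a hypothetical helper name), a model that spreads probability evenly over all 22 vocabulary entries scores ln 22, which is close to the first-epoch loss of 3.0912 reported in the training log for this model.

```python
import math

vocab_size = 22


def categorical_crossentropy(true_index, predicted_probs):
    """Loss for one example: -log of the probability of the true class."""
    return -math.log(predicted_probs[true_index])


# An untrained model is assumed here to predict roughly uniformly.
uniform = [1.0 / vocab_size] * vocab_size
initial_loss = categorical_crossentropy(1, uniform)

# A prediction that puts 0.9 on the true class and shares the rest evenly.
confident = [0.1 / (vocab_size - 1)] * vocab_size
confident[1] = 0.9
confident_loss = categorical_crossentropy(1, confident)

print(f"uniform prediction:   {initial_loss:.4f}")
print(f"confident prediction: {confident_loss:.4f}")
```

The loss falls toward zero as the probability on the correct word approaches one, which is the trend the training log shows over the epochs.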

# fit network

model.fit(X, y, epochs=500, verbose=2)


Epoch 1/500

1/1 - 1s - loss: 3.0912 - accuracy: 0.0000e+00 - 559ms/epoch - 559ms/step

Epoch 2/500

1/1 - 0s - loss: 3.0901 - accuracy: 0.0000e+00 - 2ms/epoch - 2ms/step

Epoch 3/500

1/1 - 0s - loss: 3.0890 - accuracy: 0.0000e+00 - 2ms/epoch - 2ms/step

Epoch 4/500

1/1 - 0s - loss: 3.0879 - accuracy: 0.0952 - 2ms/epoch - 2ms/step

Epoch 5/500

1/1 - 0s - loss: 3.0868 - accuracy: 0.0952 - 2ms/epoch - 2ms/step

Epoch 6/500

1/1 - 0s - loss: 3.0857 - accuracy: 0.1429 - 2ms/epoch - 2ms/step

Epoch 7/500

1/1 - 0s - loss: 3.0846 - accuracy: 0.2857 - 2ms/epoch - 2ms/step

Epoch 8/500

1/1 - 0s - loss: 3.0835 - accuracy: 0.3333 - 2ms/epoch - 2ms/step

Epoch 9/500

1/1 - 0s - loss: 3.0823 - accuracy: 0.4286 - 2ms/epoch - 2ms/step

Epoch 10/500

1/1 - 0s - loss: 3.0812 - accuracy: 0.4286 - 2ms/epoch - 2ms/step

Epoch 11/500

1/1 - 0s - loss: 3.0800 - accuracy: 0.3810 - 2ms/epoch - 2ms/step

Epoch 12/500

1/1 - 0s - loss: 3.0788 - accuracy: 0.3810 - 2ms/epoch - 2ms/step

Epoch 13/500

1/1 - 0s - loss: 3.0776 - accuracy: 0.4286 - 2ms/epoch - 2ms/step

Epoch 14/500

1/1 - 0s - loss: 3.0764 - accuracy: 0.4286 - 2ms/epoch - 2ms/step

Epoch 15/500

1/1 - 0s - loss: 3.0751 - accuracy: 0.4286 - 2ms/epoch - 2ms/step

Epoch 16/500

1/1 - 0s - loss: 3.0738 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 17/500

1/1 - 0s - loss: 3.0725 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 18/500

1/1 - 0s - loss: 3.0711 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 19/500

1/1 - 0s - loss: 3.0697 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 20/500

1/1 - 0s - loss: 3.0683 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 21/500

1/1 - 0s - loss: 3.0668 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 22/500

1/1 - 0s - loss: 3.0653 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 23/500

1/1 - 0s - loss: 3.0637 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 24/500

1/1 - 0s - loss: 3.0621 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 25/500

1/1 - 0s - loss: 3.0605 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 26/500

1/1 - 0s - loss: 3.0588 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 27/500

1/1 - 0s - loss: 3.0570 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 28/500

1/1 - 0s - loss: 3.0552 - accuracy: 0.5714 - 5ms/epoch - 5ms/step

Epoch 29/500

1/1 - 0s - loss: 3.0533 - accuracy: 0.5714 - 3ms/epoch - 3ms/step

Epoch 30/500

1/1 - 0s - loss: 3.0514 - accuracy: 0.5714 - 4ms/epoch - 4ms/step

Epoch 31/500

1/1 - 0s - loss: 3.0494 - accuracy: 0.4762 - 3ms/epoch - 3ms/step

Epoch 32/500

1/1 - 0s - loss: 3.0473 - accuracy: 0.4762 - 4ms/epoch - 4ms/step

Epoch 33/500

1/1 - 0s - loss: 3.0452 - accuracy: 0.4762 - 4ms/epoch - 4ms/step

Epoch 34/500

1/1 - 0s - loss: 3.0430 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 35/500

1/1 - 0s - loss: 3.0407 - accuracy: 0.4762 - 4ms/epoch - 4ms/step

Epoch 36/500

1/1 - 0s - loss: 3.0383 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 37/500

1/1 - 0s - loss: 3.0359 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 38/500

1/1 - 0s - loss: 3.0334 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 39/500

1/1 - 0s - loss: 3.0308 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 40/500

1/1 - 0s - loss: 3.0281 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 41/500

1/1 - 0s - loss: 3.0253 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 42/500

1/1 - 0s - loss: 3.0224 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 43/500

1/1 - 0s - loss: 3.0194 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 44/500

1/1 - 0s - loss: 3.0163 - accuracy: 0.4762 - 1ms/epoch - 1ms/step

Epoch 45/500

1/1 - 0s - loss: 3.0131 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 46/500

1/1 - 0s - loss: 3.0099 - accuracy: 0.4762 - 1ms/epoch - 1ms/step

Epoch 47/500

1/1 - 0s - loss: 3.0065 - accuracy: 0.4762 - 1ms/epoch - 1ms/step

Epoch 48/500

1/1 - 0s - loss: 3.0029 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 49/500

1/1 - 0s - loss: 2.9993 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 50/500

1/1 - 0s - loss: 2.9955 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 51/500

1/1 - 0s - loss: 2.9917 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 52/500

1/1 - 0s - loss: 2.9876 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 53/500

1/1 - 0s - loss: 2.9835 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 54/500

1/1 - 0s - loss: 2.9792 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 55/500

1/1 - 0s - loss: 2.9748 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 56/500

1/1 - 0s - loss: 2.9702 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 57/500

1/1 - 0s - loss: 2.9655 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 58/500

1/1 - 0s - loss: 2.9606 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 59/500

1/1 - 0s - loss: 2.9556 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 60/500

1/1 - 0s - loss: 2.9504 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 61/500

1/1 - 0s - loss: 2.9450 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 62/500

1/1 - 0s - loss: 2.9395 - accuracy: 0.4762 - 2ms/epoch - 2ms/step

Epoch 63/500

1/1 - 0s - loss: 2.9338 - accuracy: 0.4762 - 1ms/epoch - 1ms/step

Epoch 64/500

1/1 - 0s - loss: 2.9279 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 65/500

1/1 - 0s - loss: 2.9218 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 66/500

1/1 - 0s - loss: 2.9155 - accuracy: 0.5238 - 1ms/epoch - 1ms/step

Epoch 67/500

1/1 - 0s - loss: 2.9091 - accuracy: 0.5238 - 1ms/epoch - 1ms/step

Epoch 68/500

1/1 - 0s - loss: 2.9024 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 69/500

1/1 - 0s - loss: 2.8956 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 70/500

1/1 - 0s - loss: 2.8885 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 71/500

1/1 - 0s - loss: 2.8812 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 72/500

1/1 - 0s - loss: 2.8737 - accuracy: 0.5238 - 1ms/epoch - 1ms/step

Epoch 73/500

1/1 - 0s - loss: 2.8660 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 74/500

1/1 - 0s - loss: 2.8580 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 75/500

1/1 - 0s - loss: 2.8498 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 76/500

1/1 - 0s - loss: 2.8414 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 77/500

1/1 - 0s - loss: 2.8328 - accuracy: 0.5238 - 2ms/epoch - 2ms/step

Epoch 78/500

1/1 - 0s - loss: 2.8239 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 79/500

1/1 - 0s - loss: 2.8147 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 80/500

1/1 - 0s - loss: 2.8053 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 81/500

1/1 - 0s - loss: 2.7956 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 82/500

1/1 - 0s - loss: 2.7857 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 83/500

1/1 - 0s - loss: 2.7755 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 84/500

1/1 - 0s - loss: 2.7650 - accuracy: 0.5714 - 2ms/epoch - 2ms/step

Epoch 85/500

1/1 - 0s - loss: 2.7543 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 86/500

1/1 - 0s - loss: 2.7433 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 87/500

1/1 - 0s - loss: 2.7320 - accuracy: 0.6190 - 3ms/epoch - 3ms/step

Epoch 88/500

1/1 - 0s - loss: 2.7204 - accuracy: 0.6190 - 3ms/epoch - 3ms/step

Epoch 89/500

1/1 - 0s - loss: 2.7086 - accuracy: 0.6190 - 4ms/epoch - 4ms/step

Epoch 90/500

1/1 - 0s - loss: 2.6965 - accuracy: 0.6190 - 3ms/epoch - 3ms/step

Epoch 91/500

1/1 - 0s - loss: 2.6840 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 92/500

1/1 - 0s - loss: 2.6713 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 93/500

1/1 - 0s - loss: 2.6584 - accuracy: 0.6190 - 3ms/epoch - 3ms/step

Epoch 94/500

1/1 - 0s - loss: 2.6451 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 95/500

1/1 - 0s - loss: 2.6315 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 96/500

1/1 - 0s - loss: 2.6177 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 97/500

1/1 - 0s - loss: 2.6036 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 98/500

1/1 - 0s - loss: 2.5892 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 99/500

1/1 - 0s - loss: 2.5745 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 100/500

1/1 - 0s - loss: 2.5595 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 101/500

1/1 - 0s - loss: 2.5443 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 102/500

1/1 - 0s - loss: 2.5288 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 103/500

1/1 - 0s - loss: 2.5130 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 104/500

1/1 - 0s - loss: 2.4970 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 105/500

1/1 - 0s - loss: 2.4807 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 106/500

1/1 - 0s - loss: 2.4642 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 107/500

1/1 - 0s - loss: 2.4475 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 108/500

1/1 - 0s - loss: 2.4305 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 109/500

1/1 - 0s - loss: 2.4133 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 110/500

1/1 - 0s - loss: 2.3958 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 111/500

1/1 - 0s - loss: 2.3782 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 112/500

1/1 - 0s - loss: 2.3604 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 113/500

1/1 - 0s - loss: 2.3424 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 114/500

1/1 - 0s - loss: 2.3242 - accuracy: 0.6190 - 1ms/epoch - 1ms/step

Epoch 115/500

1/1 - 0s - loss: 2.3058 - accuracy: 0.6190 - 2ms/epoch - 2ms/step

Epoch 116/500

1/1 - 0s - loss: 2.2873 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 117/500

1/1 - 0s - loss: 2.2686 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 118/500

1/1 - 0s - loss: 2.2498 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 119/500

1/1 - 0s - loss: 2.2309 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 120/500

1/1 - 0s - loss: 2.2119 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 121/500

1/1 - 0s - loss: 2.1928 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 122/500

1/1 - 0s - loss: 2.1735 - accuracy: 0.6667 - 2ms/epoch - 2ms/step

Epoch 123/500

1/1 - 0s - loss: 2.1542 - accuracy: 0.6667 - 2ms/epoch - 2ms/step

Epoch 124/500

1/1 - 0s - loss: 2.1349 - accuracy: 0.6667 - 2ms/epoch - 2ms/step

Epoch 125/500

1/1 - 0s - loss: 2.1154 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 126/500

1/1 - 0s - loss: 2.0959 - accuracy: 0.6667 - 2ms/epoch - 2ms/step

Epoch 127/500

1/1 - 0s - loss: 2.0764 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 128/500

1/1 - 0s - loss: 2.0569 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 129/500

1/1 - 0s - loss: 2.0373 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 130/500

1/1 - 0s - loss: 2.0177 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 131/500

1/1 - 0s - loss: 1.9981 - accuracy: 0.6667 - 2ms/epoch - 2ms/step

Epoch 132/500

1/1 - 0s - loss: 1.9786 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 133/500

1/1 - 0s - loss: 1.9590 - accuracy: 0.6667 - 2ms/epoch - 2ms/step

Epoch 134/500

1/1 - 0s - loss: 1.9394 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 135/500

1/1 - 0s - loss: 1.9199 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 136/500

1/1 - 0s - loss: 1.9003 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 137/500

1/1 - 0s - loss: 1.8809 - accuracy: 0.6667 - 1ms/epoch - 1ms/step

Epoch 138/500

1/1 - 0s - loss: 1.8614 - accuracy: 0.7619 - 2ms/epoch - 2ms/step

Epoch 139/500

1/1 - 0s - loss: 1.8420 - accuracy: 0.7619 - 1ms/epoch - 1ms/step

Epoch 140/500

1/1 - 0s - loss: 1.8226 - accuracy: 0.7619 - 2ms/epoch - 2ms/step

Epoch 141/500

1/1 - 0s - loss: 1.8032 - accuracy: 0.7619 - 1ms/epoch - 1ms/step

Epoch 142/500

1/1 - 0s - loss: 1.7839 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 143/500

1/1 - 0s - loss: 1.7647 - accuracy: 0.8095 - 4ms/epoch - 4ms/step

Epoch 144/500

1/1 - 0s - loss: 1.7455 - accuracy: 0.8095 - 3ms/epoch - 3ms/step

Epoch 145/500

1/1 - 0s - loss: 1.7263 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 146/500

1/1 - 0s - loss: 1.7072 - accuracy: 0.8095 - 3ms/epoch - 3ms/step

Epoch 147/500

1/1 - 0s - loss: 1.6882 - accuracy: 0.8095 - 3ms/epoch - 3ms/step

Epoch 148/500

1/1 - 0s - loss: 1.6691 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 149/500

1/1 - 0s - loss: 1.6502 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 150/500

1/1 - 0s - loss: 1.6313 - accuracy: 0.8095 - 3ms/epoch - 3ms/step

Epoch 151/500

1/1 - 0s - loss: 1.6124 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 152/500

1/1 - 0s - loss: 1.5936 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 153/500

1/1 - 0s - loss: 1.5749 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 154/500

1/1 - 0s - loss: 1.5562 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 155/500

1/1 - 0s - loss: 1.5376 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 156/500

1/1 - 0s - loss: 1.5190 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 157/500

1/1 - 0s - loss: 1.5005 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 158/500

1/1 - 0s - loss: 1.4821 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 159/500

1/1 - 0s - loss: 1.4637 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 160/500

1/1 - 0s - loss: 1.4454 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 161/500

1/1 - 0s - loss: 1.4271 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 162/500

1/1 - 0s - loss: 1.4090 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 163/500

1/1 - 0s - loss: 1.3909 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 164/500

1/1 - 0s - loss: 1.3729 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 165/500

1/1 - 0s - loss: 1.3549 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 166/500

1/1 - 0s - loss: 1.3371 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 167/500

1/1 - 0s - loss: 1.3193 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 168/500

1/1 - 0s - loss: 1.3017 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 169/500

1/1 - 0s - loss: 1.2841 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 170/500

1/1 - 0s - loss: 1.2667 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 171/500

1/1 - 0s - loss: 1.2493 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 172/500

1/1 - 0s - loss: 1.2321 - accuracy: 0.8095 - 3ms/epoch - 3ms/step

Epoch 173/500

1/1 - 0s - loss: 1.2150 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 174/500

1/1 - 0s - loss: 1.1980 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 175/500

1/1 - 0s - loss: 1.1811 - accuracy: 0.8095 - 3ms/epoch - 3ms/step

Epoch 176/500

1/1 - 0s - loss: 1.1643 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 177/500

1/1 - 0s - loss: 1.1477 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 178/500

1/1 - 0s - loss: 1.1313 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 179/500

1/1 - 0s - loss: 1.1150 - accuracy: 0.8095 - 2ms/epoch - 2ms/step

Epoch 180/500

1/1 - 0s - loss: 1.0988 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 181/500

1/1 - 0s - loss: 1.0828 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 182/500

1/1 - 0s - loss: 1.0669 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 183/500

1/1 - 0s - loss: 1.0512 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 184/500

1/1 - 0s - loss: 1.0357 - accuracy: 0.8095 - 1ms/epoch - 1ms/step

Epoch 185/500

1/1 - 0s - loss: 1.0204 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 186/500

1/1 - 0s - loss: 1.0052 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 187/500

1/1 - 0s - loss: 0.9902 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 188/500

1/1 - 0s - loss: 0.9754 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 189/500

1/1 - 0s - loss: 0.9608 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 190/500

1/1 - 0s - loss: 0.9464 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 191/500

1/1 - 0s - loss: 0.9321 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 192/500

1/1 - 0s - loss: 0.9181 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 193/500

1/1 - 0s - loss: 0.9043 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 194/500

1/1 - 0s - loss: 0.8906 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 195/500

1/1 - 0s - loss: 0.8772 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 196/500

1/1 - 0s - loss: 0.8640 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 197/500

1/1 - 0s - loss: 0.8510 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 198/500

1/1 - 0s - loss: 0.8382 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 199/500

1/1 - 0s - loss: 0.8256 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 200/500

1/1 - 0s - loss: 0.8132 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 201/500

1/1 - 0s - loss: 0.8011 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 202/500

1/1 - 0s - loss: 0.7892 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 203/500

1/1 - 0s - loss: 0.7774 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 204/500

1/1 - 0s - loss: 0.7659 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 205/500

1/1 - 0s - loss: 0.7546 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 206/500

1/1 - 0s - loss: 0.7435 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 207/500

1/1 - 0s - loss: 0.7327 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 208/500

1/1 - 0s - loss: 0.7220 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 209/500

1/1 - 0s - loss: 0.7116 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 210/500

1/1 - 0s - loss: 0.7013 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 211/500

1/1 - 0s - loss: 0.6913 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 212/500

1/1 - 0s - loss: 0.6815 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 213/500

1/1 - 0s - loss: 0.6719 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 214/500

1/1 - 0s - loss: 0.6625 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 215/500

1/1 - 0s - loss: 0.6533 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 216/500

1/1 - 0s - loss: 0.6443 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 217/500

1/1 - 0s - loss: 0.6355 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 218/500

1/1 - 0s - loss: 0.6269 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 219/500

1/1 - 0s - loss: 0.6184 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 220/500

1/1 - 0s - loss: 0.6102 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 221/500

1/1 - 0s - loss: 0.6022 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 222/500

1/1 - 0s - loss: 0.5943 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 223/500

1/1 - 0s - loss: 0.5866 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 224/500

1/1 - 0s - loss: 0.5791 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 225/500

1/1 - 0s - loss: 0.5718 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 226/500

1/1 - 0s - loss: 0.5647 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 227/500

1/1 - 0s - loss: 0.5577 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 228/500

1/1 - 0s - loss: 0.5509 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 229/500

1/1 - 0s - loss: 0.5442 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 230/500

1/1 - 0s - loss: 0.5378 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 231/500

1/1 - 0s - loss: 0.5314 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 232/500

1/1 - 0s - loss: 0.5253 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 233/500

1/1 - 0s - loss: 0.5192 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 234/500

1/1 - 0s - loss: 0.5134 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 235/500

1/1 - 0s - loss: 0.5076 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 236/500

1/1 - 0s - loss: 0.5020 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 237/500

1/1 - 0s - loss: 0.4966 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 238/500

1/1 - 0s - loss: 0.4913 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 239/500

1/1 - 0s - loss: 0.4861 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 240/500

1/1 - 0s - loss: 0.4810 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 241/500

1/1 - 0s - loss: 0.4761 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 242/500

1/1 - 0s - loss: 0.4713 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 243/500

1/1 - 0s - loss: 0.4666 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 244/500

1/1 - 0s - loss: 0.4620 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 245/500

1/1 - 0s - loss: 0.4576 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 246/500

1/1 - 0s - loss: 0.4532 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 247/500

1/1 - 0s - loss: 0.4490 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 248/500

1/1 - 0s - loss: 0.4449 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 249/500

1/1 - 0s - loss: 0.4409 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 250/500

1/1 - 0s - loss: 0.4369 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 251/500

1/1 - 0s - loss: 0.4331 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 252/500

1/1 - 0s - loss: 0.4294 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 253/500

1/1 - 0s - loss: 0.4257 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 254/500

1/1 - 0s - loss: 0.4222 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 255/500

1/1 - 0s - loss: 0.4187 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 256/500

1/1 - 0s - loss: 0.4154 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 257/500

1/1 - 0s - loss: 0.4121 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 258/500

1/1 - 0s - loss: 0.4089 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 259/500

1/1 - 0s - loss: 0.4058 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 260/500

1/1 - 0s - loss: 0.4027 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 261/500

1/1 - 0s - loss: 0.3997 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 262/500

1/1 - 0s - loss: 0.3968 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 263/500

1/1 - 0s - loss: 0.3940 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 264/500

1/1 - 0s - loss: 0.3912 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 265/500

1/1 - 0s - loss: 0.3885 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 266/500

1/1 - 0s - loss: 0.3859 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 267/500

1/1 - 0s - loss: 0.3833 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 268/500

1/1 - 0s - loss: 0.3808 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 269/500

1/1 - 0s - loss: 0.3784 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 270/500

1/1 - 0s - loss: 0.3760 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 271/500

1/1 - 0s - loss: 0.3736 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 272/500

1/1 - 0s - loss: 0.3713 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 273/500

1/1 - 0s - loss: 0.3691 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 274/500

1/1 - 0s - loss: 0.3669 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 275/500

1/1 - 0s - loss: 0.3648 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 276/500

1/1 - 0s - loss: 0.3627 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 277/500

1/1 - 0s - loss: 0.3607 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 278/500

1/1 - 0s - loss: 0.3587 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 279/500

1/1 - 0s - loss: 0.3568 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 280/500

1/1 - 0s - loss: 0.3549 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 281/500

1/1 - 0s - loss: 0.3530 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 282/500

1/1 - 0s - loss: 0.3512 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 283/500

1/1 - 0s - loss: 0.3494 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 284/500

1/1 - 0s - loss: 0.3476 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 285/500

1/1 - 0s - loss: 0.3459 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 286/500

1/1 - 0s - loss: 0.3443 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 287/500

1/1 - 0s - loss: 0.3426 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 288/500

1/1 - 0s - loss: 0.3410 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 289/500

1/1 - 0s - loss: 0.3395 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 290/500

1/1 - 0s - loss: 0.3379 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 291/500

1/1 - 0s - loss: 0.3364 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 292/500

1/1 - 0s - loss: 0.3349 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 293/500

1/1 - 0s - loss: 0.3335 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 294/500

1/1 - 0s - loss: 0.3321 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 295/500

1/1 - 0s - loss: 0.3307 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 296/500

1/1 - 0s - loss: 0.3293 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 297/500

1/1 - 0s - loss: 0.3280 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 298/500

1/1 - 0s - loss: 0.3267 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 299/500

1/1 - 0s - loss: 0.3254 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 300/500

1/1 - 0s - loss: 0.3241 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 301/500

1/1 - 0s - loss: 0.3229 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 302/500

1/1 - 0s - loss: 0.3217 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 303/500

1/1 - 0s - loss: 0.3205 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 304/500

1/1 - 0s - loss: 0.3193 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 305/500

1/1 - 0s - loss: 0.3181 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 306/500

1/1 - 0s - loss: 0.3170 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 307/500

1/1 - 0s - loss: 0.3159 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 308/500

1/1 - 0s - loss: 0.3148 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 309/500

1/1 - 0s - loss: 0.3137 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 310/500

1/1 - 0s - loss: 0.3127 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 311/500

1/1 - 0s - loss: 0.3116 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 312/500

1/1 - 0s - loss: 0.3106 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 313/500

1/1 - 0s - loss: 0.3096 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 314/500

1/1 - 0s - loss: 0.3086 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 315/500

1/1 - 0s - loss: 0.3076 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 316/500

1/1 - 0s - loss: 0.3066 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 317/500

1/1 - 0s - loss: 0.3057 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 318/500

1/1 - 0s - loss: 0.3048 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 319/500

1/1 - 0s - loss: 0.3038 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 320/500

1/1 - 0s - loss: 0.3029 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 321/500

1/1 - 0s - loss: 0.3021 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 322/500

1/1 - 0s - loss: 0.3012 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 323/500

1/1 - 0s - loss: 0.3003 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 324/500

1/1 - 0s - loss: 0.2995 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 325/500

1/1 - 0s - loss: 0.2986 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 326/500

1/1 - 0s - loss: 0.2978 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 327/500

1/1 - 0s - loss: 0.2970 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 328/500

1/1 - 0s - loss: 0.2962 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 329/500

1/1 - 0s - loss: 0.2954 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 330/500

1/1 - 0s - loss: 0.2946 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 331/500

1/1 - 0s - loss: 0.2938 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 332/500

1/1 - 0s - loss: 0.2931 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 333/500

1/1 - 0s - loss: 0.2923 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 334/500

1/1 - 0s - loss: 0.2916 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 335/500

1/1 - 0s - loss: 0.2908 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 336/500

1/1 - 0s - loss: 0.2901 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 337/500

1/1 - 0s - loss: 0.2894 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 338/500

1/1 - 0s - loss: 0.2887 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 339/500

1/1 - 0s - loss: 0.2880 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 340/500

1/1 - 0s - loss: 0.2873 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 341/500

1/1 - 0s - loss: 0.2866 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 342/500

1/1 - 0s - loss: 0.2860 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 343/500

1/1 - 0s - loss: 0.2853 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 344/500

1/1 - 0s - loss: 0.2847 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 345/500

1/1 - 0s - loss: 0.2840 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 346/500

1/1 - 0s - loss: 0.2834 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 347/500

1/1 - 0s - loss: 0.2828 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 348/500

1/1 - 0s - loss: 0.2822 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 349/500

1/1 - 0s - loss: 0.2815 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 350/500

1/1 - 0s - loss: 0.2809 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 351/500

1/1 - 0s - loss: 0.2803 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 352/500

1/1 - 0s - loss: 0.2798 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 353/500

1/1 - 0s - loss: 0.2792 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 354/500

1/1 - 0s - loss: 0.2786 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 355/500

1/1 - 0s - loss: 0.2780 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 356/500

1/1 - 0s - loss: 0.2775 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 357/500

1/1 - 0s - loss: 0.2769 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 358/500

1/1 - 0s - loss: 0.2764 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 359/500

1/1 - 0s - loss: 0.2758 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 360/500

1/1 - 0s - loss: 0.2753 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 361/500

1/1 - 0s - loss: 0.2748 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 362/500

1/1 - 0s - loss: 0.2743 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 363/500

1/1 - 0s - loss: 0.2737 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 364/500

1/1 - 0s - loss: 0.2732 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 365/500

1/1 - 0s - loss: 0.2727 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 366/500

1/1 - 0s - loss: 0.2722 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 367/500

1/1 - 0s - loss: 0.2718 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 368/500

1/1 - 0s - loss: 0.2713 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 369/500

1/1 - 0s - loss: 0.2708 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 370/500

1/1 - 0s - loss: 0.2703 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 371/500

1/1 - 0s - loss: 0.2699 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 372/500

1/1 - 0s - loss: 0.2694 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 373/500

1/1 - 0s - loss: 0.2689 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 374/500

1/1 - 0s - loss: 0.2685 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 375/500

1/1 - 0s - loss: 0.2681 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 376/500

1/1 - 0s - loss: 0.2676 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 377/500

1/1 - 0s - loss: 0.2672 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 378/500

1/1 - 0s - loss: 0.2668 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 379/500

1/1 - 0s - loss: 0.2664 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 380/500

1/1 - 0s - loss: 0.2659 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 381/500

1/1 - 0s - loss: 0.2655 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 382/500

1/1 - 0s - loss: 0.2651 - accuracy: 0.8571 - 5ms/epoch - 5ms/step

Epoch 383/500

1/1 - 0s - loss: 0.2647 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 384/500

1/1 - 0s - loss: 0.2643 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 385/500

1/1 - 0s - loss: 0.2639 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 386/500

1/1 - 0s - loss: 0.2636 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 387/500

1/1 - 0s - loss: 0.2632 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 388/500

1/1 - 0s - loss: 0.2628 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 389/500

1/1 - 0s - loss: 0.2624 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 390/500

1/1 - 0s - loss: 0.2621 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 391/500

1/1 - 0s - loss: 0.2617 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 392/500

1/1 - 0s - loss: 0.2614 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 393/500

1/1 - 0s - loss: 0.2610 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 394/500

1/1 - 0s - loss: 0.2607 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 395/500

1/1 - 0s - loss: 0.2603 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 396/500

1/1 - 0s - loss: 0.2600 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 397/500

1/1 - 0s - loss: 0.2597 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 398/500

1/1 - 0s - loss: 0.2593 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 399/500

1/1 - 0s - loss: 0.2590 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 400/500

1/1 - 0s - loss: 0.2587 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 401/500

1/1 - 0s - loss: 0.2584 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 402/500

1/1 - 0s - loss: 0.2581 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 403/500

1/1 - 0s - loss: 0.2578 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 404/500

1/1 - 0s - loss: 0.2575 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 405/500

1/1 - 0s - loss: 0.2572 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 406/500

1/1 - 0s - loss: 0.2569 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 407/500

1/1 - 0s - loss: 0.2566 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 408/500

1/1 - 0s - loss: 0.2563 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 409/500

1/1 - 0s - loss: 0.2560 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 410/500

1/1 - 0s - loss: 0.2557 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 411/500

1/1 - 0s - loss: 0.2555 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 412/500

1/1 - 0s - loss: 0.2552 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 413/500

1/1 - 0s - loss: 0.2549 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 414/500

1/1 - 0s - loss: 0.2547 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 415/500

1/1 - 0s - loss: 0.2544 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 416/500

1/1 - 0s - loss: 0.2541 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 417/500

1/1 - 0s - loss: 0.2539 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 418/500

1/1 - 0s - loss: 0.2536 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 419/500

1/1 - 0s - loss: 0.2534 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 420/500

1/1 - 0s - loss: 0.2532 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 421/500

1/1 - 0s - loss: 0.2529 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 422/500

1/1 - 0s - loss: 0.2527 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 423/500

1/1 - 0s - loss: 0.2524 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 424/500

1/1 - 0s - loss: 0.2522 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 425/500

1/1 - 0s - loss: 0.2520 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 426/500

1/1 - 0s - loss: 0.2517 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 427/500

1/1 - 0s - loss: 0.2515 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 428/500

1/1 - 0s - loss: 0.2513 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 429/500

1/1 - 0s - loss: 0.2511 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 430/500

1/1 - 0s - loss: 0.2509 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 431/500

1/1 - 0s - loss: 0.2507 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 432/500

1/1 - 0s - loss: 0.2504 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 433/500

1/1 - 0s - loss: 0.2502 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 434/500

1/1 - 0s - loss: 0.2500 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 435/500

1/1 - 0s - loss: 0.2498 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 436/500

1/1 - 0s - loss: 0.2496 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 437/500

1/1 - 0s - loss: 0.2494 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 438/500

1/1 - 0s - loss: 0.2492 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 439/500

1/1 - 0s - loss: 0.2491 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 440/500

1/1 - 0s - loss: 0.2489 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 441/500

1/1 - 0s - loss: 0.2487 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 442/500

1/1 - 0s - loss: 0.2485 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 443/500

1/1 - 0s - loss: 0.2483 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 444/500

1/1 - 0s - loss: 0.2481 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 445/500

1/1 - 0s - loss: 0.2479 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 446/500

1/1 - 0s - loss: 0.2478 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 447/500

1/1 - 0s - loss: 0.2476 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 448/500

1/1 - 0s - loss: 0.2474 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 449/500

1/1 - 0s - loss: 0.2472 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 450/500

1/1 - 0s - loss: 0.2471 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 451/500

1/1 - 0s - loss: 0.2469 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 452/500

1/1 - 0s - loss: 0.2467 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 453/500

1/1 - 0s - loss: 0.2466 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 454/500

1/1 - 0s - loss: 0.2464 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 455/500

1/1 - 0s - loss: 0.2463 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 456/500

1/1 - 0s - loss: 0.2461 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 457/500

1/1 - 0s - loss: 0.2459 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 458/500

1/1 - 0s - loss: 0.2458 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 459/500

1/1 - 0s - loss: 0.2456 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 460/500

1/1 - 0s - loss: 0.2455 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 461/500

1/1 - 0s - loss: 0.2453 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 462/500

1/1 - 0s - loss: 0.2452 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 463/500

1/1 - 0s - loss: 0.2450 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 464/500

1/1 - 0s - loss: 0.2449 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 465/500

1/1 - 0s - loss: 0.2448 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 466/500

1/1 - 0s - loss: 0.2446 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 467/500

1/1 - 0s - loss: 0.2445 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 468/500

1/1 - 0s - loss: 0.2443 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 469/500

1/1 - 0s - loss: 0.2442 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 470/500

1/1 - 0s - loss: 0.2441 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 471/500

1/1 - 0s - loss: 0.2439 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 472/500

1/1 - 0s - loss: 0.2438 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 473/500

1/1 - 0s - loss: 0.2437 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 474/500

1/1 - 0s - loss: 0.2435 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 475/500

1/1 - 0s - loss: 0.2434 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 476/500

1/1 - 0s - loss: 0.2433 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 477/500

1/1 - 0s - loss: 0.2431 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 478/500

1/1 - 0s - loss: 0.2430 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 479/500

1/1 - 0s - loss: 0.2429 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 480/500

1/1 - 0s - loss: 0.2428 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 481/500

1/1 - 0s - loss: 0.2427 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 482/500

1/1 - 0s - loss: 0.2425 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 483/500

1/1 - 0s - loss: 0.2424 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 484/500

1/1 - 0s - loss: 0.2423 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 485/500

1/1 - 0s - loss: 0.2422 - accuracy: 0.8571 - 4ms/epoch - 4ms/step

Epoch 486/500

1/1 - 0s - loss: 0.2421 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 487/500

1/1 - 0s - loss: 0.2420 - accuracy: 0.8571 - 3ms/epoch - 3ms/step

Epoch 488/500

1/1 - 0s - loss: 0.2418 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 489/500

1/1 - 0s - loss: 0.2417 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 490/500

1/1 - 0s - loss: 0.2416 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 491/500

1/1 - 0s - loss: 0.2415 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 492/500

1/1 - 0s - loss: 0.2414 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 493/500

1/1 - 0s - loss: 0.2413 - accuracy: 0.8571 - 2ms/epoch - 2ms/step

Epoch 494/500

1/1 - 0s - loss: 0.2412 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 495/500

1/1 - 0s - loss: 0.2411 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 496/500

1/1 - 0s - loss: 0.2410 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 497/500

1/1 - 0s - loss: 0.2409 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 498/500

1/1 - 0s - loss: 0.2408 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 499/500

1/1 - 0s - loss: 0.2407 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

Epoch 500/500

1/1 - 0s - loss: 0.2406 - accuracy: 0.8571 - 1ms/epoch - 1ms/step

<keras.src.callbacks.History at 0x16f059a90>

plot_model(model, show_shapes= True)

Text Generation Using the Model#

After training the bigram-based LM, we can use the model for text generation (e.g., a one-to-many sequence-to-sequence application).

We can implement a simple text generator: at each step, the model outputs the next word with the highest predicted probability.

At every time step, the model uses the newly predicted word as the input for the next prediction.

# generate a sequence from the model
def generate_seq(model, tokenizer, seed_text, n_words):
    in_text, result = seed_text, seed_text
    # generate a fixed number of words
    for _ in range(n_words):
        # encode the text as integer
        encoded = tokenizer.texts_to_sequences([in_text])[0]
        encoded = np.array(encoded)
        # predict a word in the vocabulary
        yhat = np.argmax(model.predict(encoded), axis=-1)
        # map predicted word index to word
        out_word = ''
        for word, index in tokenizer.word_index.items():
            if index == yhat:
                out_word = word
                break
        # append to input
        in_text, result = out_word, result + ' ' + out_word
    return result

In the above generate_seq(), we use greedy search, which selects the most likely word at each time step of the output sequence. While this approach is efficient, the resulting output sequence is not necessarily optimal.

# evaluate

print(generate_seq(model, tokenizer, 'Jill', 10))

1/1 [==============================] - 0s 144ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

Jill went up the hill and jill went up the hill

Sampling Strategies for Text Generation#

Given a trained language model and a seed text chunk, we can generate new text with greedy search, as shown above.

However, we may sometimes want to add a degree of variation to the otherwise robotic texts for linguistic creativity.

Possible alternatives:

We can re-normalize the predicted probability distribution over all next words to reduce the gap between the highest and the lowest probabilities, and then sample the next word from the re-normalized distribution. (Please see Ch. 8.1 Text Generation with LSTM in Chollet’s Deep Learning with Python. You will need this strategy for the assignment.)

We can use a non-greedy search that keeps the top k most probable candidates at each next-word prediction (cf. Beam Search below) and determines the tokens by choosing the sequence with the maximum probability.
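The re-normalization in the first alternative is commonly called temperature sampling. The following is a minimal NumPy sketch of the idea, not the tutorial's own code: the function names `reweight` and `sample_next`, the toy distribution `probs`, and the temperature values are all assumptions made for illustration.

```python
import numpy as np

def reweight(probs, temperature=1.0):
    """Re-normalize a probability distribution with a temperature.

    temperature > 1 flattens the distribution (more variation);
    temperature < 1 sharpens it (closer to greedy search).
    """
    probs = np.asarray(probs, dtype="float64")
    # a small constant avoids log(0) for zero-probability words
    logits = np.log(probs + 1e-12) / temperature
    # subtract the maximum before exponentiating for numerical stability
    exp_logits = np.exp(logits - np.max(logits))
    return exp_logits / exp_logits.sum()

def sample_next(probs, temperature=1.0, rng=None):
    """Draw one word index from the re-weighted distribution."""
    rng = np.random.default_rng() if rng is None else rng
    p = reweight(probs, temperature)
    return int(rng.choice(len(p), p=p))

# toy next-word distribution over a 4-word vocabulary (hypothetical values)
probs = [0.70, 0.20, 0.08, 0.02]
flat = reweight(probs, temperature=2.0)    # smaller gap between words
sharp = reweight(probs, temperature=0.5)   # larger gap between words
print(flat.round(3), sharp.round(3))
print(sample_next(probs, temperature=2.0, rng=np.random.default_rng(0)))
```

In a generator such as generate_seq(), a function like `sample_next` would take the place of the `np.argmax` step, so that less probable words are occasionally chosen.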

Beam Search (skipped)#

Searching in NLP#

In the previous demonstration, we generated each next word with a naive approach, i.e., always choosing the word with the highest probability.

It is common in NLP for models to output a probability distribution over the words in the vocabulary.

Decoding therefore involves searching through the possible output sequences based on their likelihood.

Choosing the highest-probability word at each step does not guarantee the optimal sequence.

The search problem is exponential in the length of the output sequence: with a vocabulary of size \(V\) and an output of length \(T\), there are \(V^T\) candidate sequences.
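To make these two points concrete, here is a small sketch on a made-up bigram model. The three-word vocabulary and the probability tables `first` and `trans` are hypothetical values chosen so that greedy search misses the most probable sequence; they do not come from the trained model in this tutorial.

```python
import itertools
import numpy as np

vocab = ["a", "b", "c"]
# hypothetical bigram model:
# first[i]    = P(word i | seed)
# trans[i, j] = P(word j | previous word i)
first = np.array([0.5, 0.4, 0.1])
trans = np.array([
    [0.40, 0.30, 0.30],
    [0.90, 0.05, 0.05],
    [0.20, 0.20, 0.60],
])

def seq_prob(ids):
    """Probability of a whole sequence of word indices under the toy model."""
    p = first[ids[0]]
    for prev, cur in zip(ids, ids[1:]):
        p *= trans[prev, cur]
    return float(p)

def greedy(n_words):
    """Pick the single most probable word at every step."""
    ids = [int(np.argmax(first))]
    while len(ids) < n_words:
        ids.append(int(np.argmax(trans[ids[-1]])))
    return ids

def exhaustive(n_words):
    """Score every possible sequence: len(vocab) ** n_words candidates."""
    candidates = itertools.product(range(len(vocab)), repeat=n_words)
    return list(max(candidates, key=seq_prob))

g, best = greedy(2), exhaustive(2)
print("greedy:    ", [vocab[i] for i in g], seq_prob(g))
print("exhaustive:", [vocab[i] for i in best], seq_prob(best))
# the number of candidates grows as V ** T
print([len(vocab) ** t for t in (2, 5, 10)])
```

Greedy search commits to "a" because it is the most probable first word, whereas the exhaustive search finds that starting with "b" leads to a more probable two-word sequence. Exhaustive search is only feasible here because the vocabulary and the sequence are tiny.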

Beam Search Decoding#

Beam search expands all possible next steps and keeps the \(k\) most likely, where \(k\) is a user-specified parameter that controls the number of beams, i.e., parallel searches through the sequence of probabilities.

The search can stop for each candidate independently by:

reaching a maximum length

reaching an end-of-sequence token

reaching a threshold likelihood
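The three stopping conditions above can be sketched on a toy bigram table as follows. This is an illustrative sketch under stated assumptions, not the decoder used later in this section: the vocabulary, the `<eos>` token, the table `trans`, and the parameter names `max_len` and `max_score` are all hypothetical.

```python
import numpy as np

vocab = ["<eos>", "jack", "jill", "went"]
EOS = 0
# hypothetical bigram table: trans[i, j] = P(word j | previous word i)
trans = np.array([
    [1.00, 0.00, 0.00, 0.00],   # after <eos> (never expanded)
    [0.05, 0.15, 0.30, 0.50],   # after jack
    [0.25, 0.15, 0.10, 0.50],   # after jill
    [0.60, 0.25, 0.15, 0.00],   # after went
])

def beam_search(start, k=2, max_len=5, max_score=np.inf):
    """Return up to k (sequence, score) pairs.

    score is the negative log-probability, so lower is better.
    """
    beams = [([start], 0.0)]
    finished = []
    while beams:
        candidates = []
        for seq, score in beams:
            for j, p in enumerate(trans[seq[-1]]):
                if p > 0:  # skip impossible words (avoids log(0))
                    candidates.append((seq + [j], score - np.log(p)))
        # keep the k candidates with the lowest negative log-probability
        candidates.sort(key=lambda c: c[1])
        beams = []
        for seq, score in candidates[:k]:
            done = (seq[-1] == EOS            # end-of-sequence token
                    or len(seq) >= max_len    # maximum length
                    or score > max_score)     # likelihood threshold
            (finished if done else beams).append((seq, float(score)))
    finished.sort(key=lambda c: c[1])
    return finished[:k]

for seq, score in beam_search(start=vocab.index("jill"), k=2, max_len=5):
    print(" ".join(vocab[i] for i in seq), round(score, 3))
```

Each candidate is checked on its own, so a sequence that reaches `<eos>` is set aside while the remaining beams keep growing. Note that comparing raw scores across sequences of different lengths favors shorter sequences; length normalization is a common remedy that this sketch leaves out.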

Please see Jason Brownlee's blog post [How to Implement a Beam Search Decoder for Natural Language Processing](https://machinelearningmastery.com/beam-search-decoder-natural-language-processing/) for the Python implementation.

The following code is based on Jason's code.

Warning

The following code may not work properly. In beam search, when the model predicts None as the next word, we should treat it as a stopping condition. The following code has not been optimized in this respect.

# generate a sequence from the model
def generate_seq_beam(model, tokenizer, seed_text, n_words, k):
    in_text = seed_text
    sequences = [[[in_text], 0.0]]
    # prepare id_2_word map
    id_2_word = dict([(i, w) for (w, i) in tokenizer.word_index.items()])
    # start next-word generating
    for _ in range(n_words):
        # temp list to hold all possible candidates
        # `sequence + next words`
        all_candidates = list()
        #print("Next word ", _+1)
        # for each existing sequence
        # take the last word of the sequence
        # find probs of all words in the next position
        # save the top k
        # all_candidates should have 3 * 22 = 66 candidates
        for i in range(len(sequences)):
            # for the current sequence
            seq, score = sequences[i]
            # next word probability distribution
            encoded = tokenizer.texts_to_sequences([seq[-1]])[0]
            encoded = np.array(encoded)
            model_pred_prob = model.predict(encoded).flatten()
            # compute all probabilities for `current_sequence + all_possible_next_word`
            for j in range(len(model_pred_prob)):
                # output index j is the word id j, as in generate_seq();
                # id 0 is the padding slot, so it maps to None
                candidate = [seq + [id_2_word.get(j)], score - np.log(model_pred_prob[j])]
                all_candidates.append(candidate)
        # drop candidates whose last word is None, after all sequences are expanded
        all_candidates = [[seq, score] for seq, score in all_candidates if seq[-1] is not None]
        # order all candidates (sequence + next word) by score
        #print("all_candidates length:", len(all_candidates))
        ordered = sorted(all_candidates, key=lambda x: x[1])  # default ascending
        # select k best
        sequences = ordered[:k]  ## choose top k
    return sequences

generate_seq_beam(model, tokenizer, 'Jill', 5, k =10)

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 10ms/step

1/1 [==============================] - 0s 9ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 8ms/step

1/1 [==============================] - 0s 7ms/step

[[['Jill', 'up', 'hill', 'jack', 'jack', 'jack'], 4.68290707655251],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down'], 4.72132352180779],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down', 'jack'], 4.762729896232486],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down', 'jack', 'jack'],

5.449550641700625],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down', 'jack', 'down'],

5.487967086955905],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down', 'jack', 'down', 'jack'],

5.529373461380601],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down', 'jack', 'jack', 'jack'],

6.136371387168765],

[['Jill', 'up', 'hill', 'jack', 'jack', 'down', 'jack', 'jack', 'down'],

6.174787832424045],

[['Jill',

'up',

'hill',

'jack',

'jack',

'down',

'jack',

'down',

'jack',

'jack'],

6.216194206848741],

[['Jill',

'up',

'hill',

'jack',

'jack',

'down',

'jack',

'jack',

'down',

'jack'],

6.216194206848741]]

References#

Check Ch 7 Neural Networks and Neural Language Models in Speech and Language Processing (3rd ed. draft).

Chollet (2017): Ch 8.1

Check Jason Brownlee’s blog post How to Develop Word-Based Neural Language Models in Python with Keras