Chapter 3 Creating Corpus

Linguistic data are important to us linguists. Data usually tell us something we don’t know, or something we are not sure of. In this chapter, I would like to show you a quick way to extract linguistic data from the web, which is by now undoubtedly the largest source of textual data available.

While there are many existing text data collections (cf. Structured Corpus and XML), chances are that sometimes you still need to collect your own data for a particular research question. But please note that when you are creating your own corpus for specific research questions, always pay attention to the three important criteria: representativeness, authenticity, and size.

Following the spirit of tidy, we will mainly do our tasks with the tidyverse and rvest libraries.

If you are new to tidyverse R, please check its official webpage for learning resources.

## Uncomment the following line for installation

# install.packages(c("tidyverse", "rvest"))

library(tidyverse)

library(rvest)

3.1 HTML Structure

The HyperText Markup Language, or HTML, is the standard markup language for documents designed to be displayed in a web browser.

3.1.1 HTML Syntax

To illustrate the structure of the HTML, please download the sample html file from: demo_data/data-sample-html.html and first open it with your browser.

<!DOCTYPE html>

<html>

<head>

<title>My First HTML </title>

</head>

<body>

<h1> Introduction </h1>

<p> Have you ever read the source code of an HTML page? This is how to get back to the course page: <a href="https://alvinntnu.github.io/NTNU_ENC2036_LECTURES/" target="_blank">ENC2036</a>. </p>

<h1> Contents of the Page </h1>

<p> Anything you can say about the page.....</p>

</body>

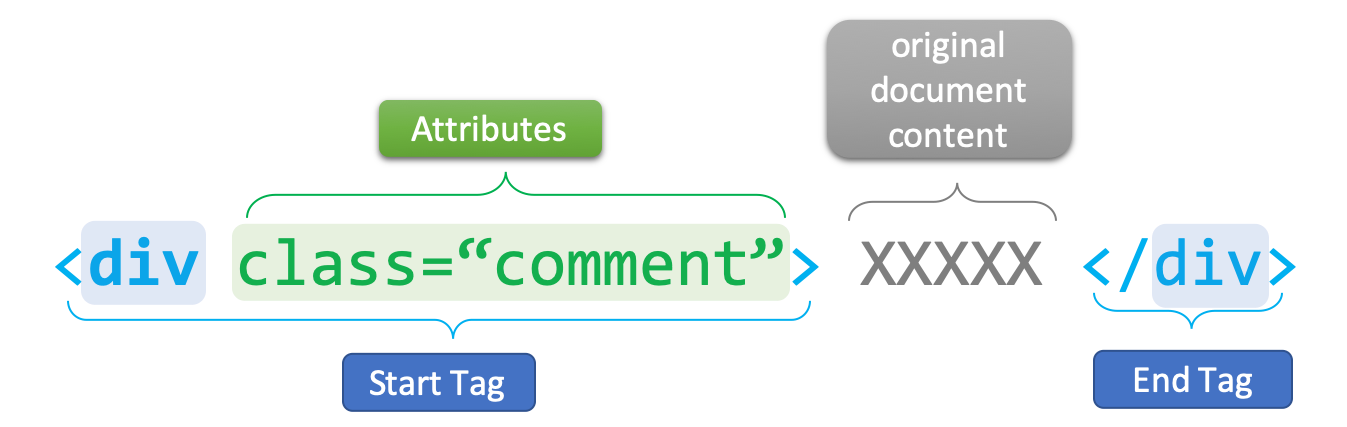

</html>An HTML document includes several important elements (cf. Figure 3.1):

- DTD: the document type definition, which informs the browser about the version of the HTML standard that the document adheres to (e.g., <!DOCTYPE HTML>)

- element: the combination of start tag, content, and end tag (e.g., <title>My First HTML</title>)

- tag: named braces that enclose the content and define its structural function (e.g., title, body, p)

- attribute: specific properties of the tag, which are placed in the start tag of the element (e.g., <a href="index.html"> Homepage </a>). They are expressed as name = "value" pairs.

Figure 3.1: Syntax of An HTML Tag Element

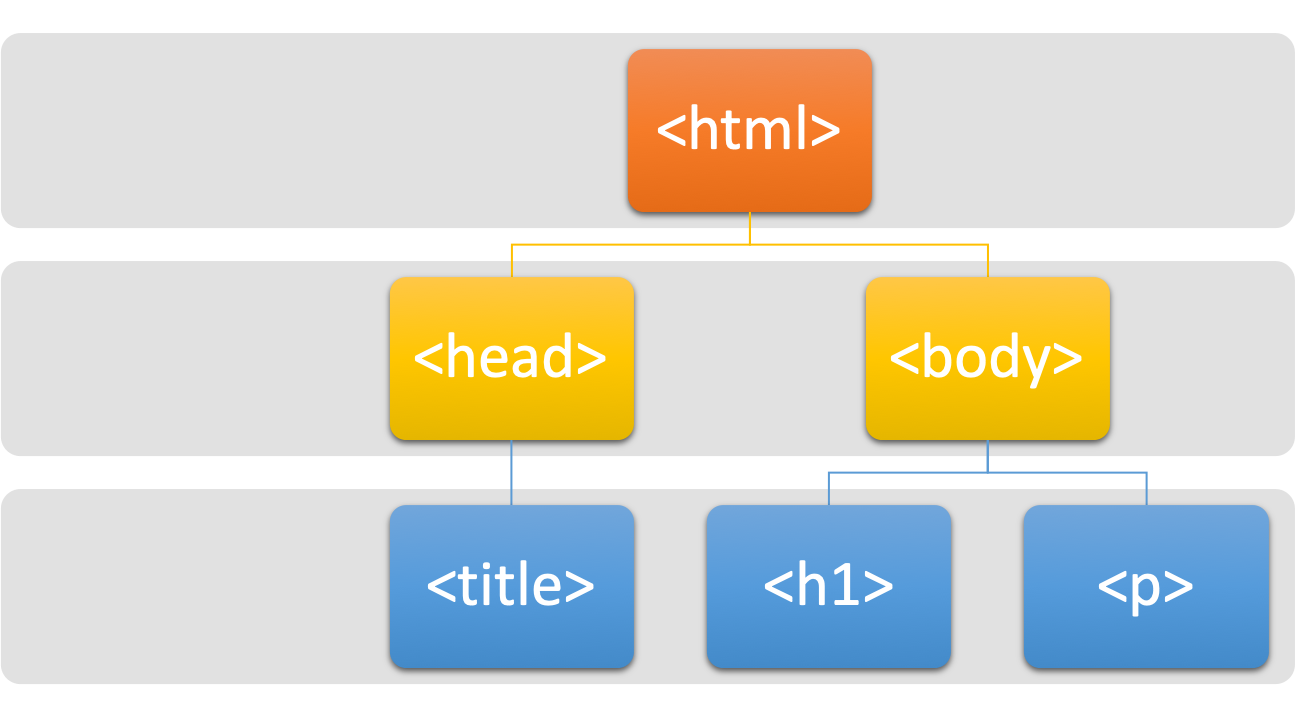

An HTML document starts with the root element <html>, which splits into two branches, <head> and <body>.

- Most of the webpage textual contents would go into the <body> part.

- Most of the web-related codes and metadata (e.g., javascripts, CSS) are often included in the <head> part.

All elements need to be strictly nested within each other in a well-formed and valid HTML file, as shown in Figure 3.2.

Figure 3.2: Tree Structure of An HTML Document
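To make the notions of element, attribute, and tree structure concrete, here is a minimal sketch that parses a small HTML string with rvest and pulls out each of them. The string is a shortened adaptation of the sample file above, and the variable names are only illustrative.

```r
library(rvest)

# A small HTML document held in a string (shortened from the sample file above)
html_txt <- '<!DOCTYPE html>
<html>
<head><title>My First HTML</title></head>
<body>
<h1>Introduction</h1>
<p>Back to the course page: <a href="https://alvinntnu.github.io/NTNU_ENC2036_LECTURES/" target="_blank">ENC2036</a>.</p>
<h1>Contents of the Page</h1>
<p>Anything you can say about the page.</p>
</body>
</html>'

doc <- read_html(html_txt)

# Element content: the text between a start tag and its end tag
page_title <- doc %>% html_node("title") %>% html_text()
headings <- doc %>% html_nodes("h1") %>% html_text()

# Attribute values: the name = "value" pairs inside the start tag of <a>
link_href <- doc %>% html_node("a") %>% html_attr("href")
link_target <- doc %>% html_node("a") %>% html_attr("target")

# Tree structure: the children of the root <html> element
root_children <- doc %>% html_children() %>% html_name()

page_title
headings
link_href
root_children
```

The same three ideas (element text, attribute values, position in the tree) are what the scraping code later in this chapter relies on.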

3.1.3 CSS

Cascading Style Sheets (CSS) is a language for describing the layout of HTML and other markup documents (e.g., XML).

HTML + CSS is by now the standard way to create and design web pages. The idea is that CSS specifies the formats/styles of the HTML elements. The following is an example of the CSS:

div.warnings {

color: pink;

font-family: "Arial";

font-size: 120%;

}

h1 {

padding-top: 20px;

padding-bottom: 20px;

}

You may wonder how to link a set of CSS style definitions to an HTML document. There are in general three ways: inline, internal, and external. You can learn more about this at W3Schools.

Here I will show you an example of the internal method. Below is a CSS style definition for <h1>.

h1 {

color: red;

margin-bottom: 2em;

}

We can embed this within a <style>...</style> element. Then you put the entire <style> element under <head> of the HTML file you would like to style.

<style>

h1 {

color: red;

margin-bottom: 2em;

}

</style>

After you include the <style> in the HTML file, refresh the web page to see if the CSS style works.
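CSS matters for scraping as well as for styling, because rvest accepts the same selector syntax (e.g., h1, div.warnings) to locate elements. The following is a minimal sketch on a made-up HTML fragment; the class names and texts are assumptions for illustration only.

```r
library(rvest)

# A made-up HTML fragment; the classes and ids are hypothetical
doc <- read_html('<html><body>
<h1 id="top">Title</h1>
<div class="warnings">Be careful.</div>
<div class="note">Just a note.</div>
<div class="warnings big">Be very careful.</div>
</body></html>')

# Type selector: all <h1> elements
h1_text <- doc %>% html_nodes("h1") %>% html_text()

# Class selector: all <div> elements whose class list contains "warnings"
warn_text <- doc %>% html_nodes("div.warnings") %>% html_text()

# ID selector: the element whose id is "top"
top_text <- doc %>% html_nodes("#top") %>% html_text()

warn_text
```

Note that div.warnings also matches the element with class="warnings big", because a class selector matches any element whose class list includes that class.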

3.2 Web Crawling

In the following demonstration, the text data scraped from the PTT forum is presented as it is without adjustment. However, please note that the language on PTT may strike some readers as profane, vulgar or even offensive.

library(tidyverse)

library(rvest)

In this tutorial, let’s assume that we would like to scrape texts from the PTT Forum. In particular, we will demonstrate how to scrape texts from the Gossiping board of PTT.

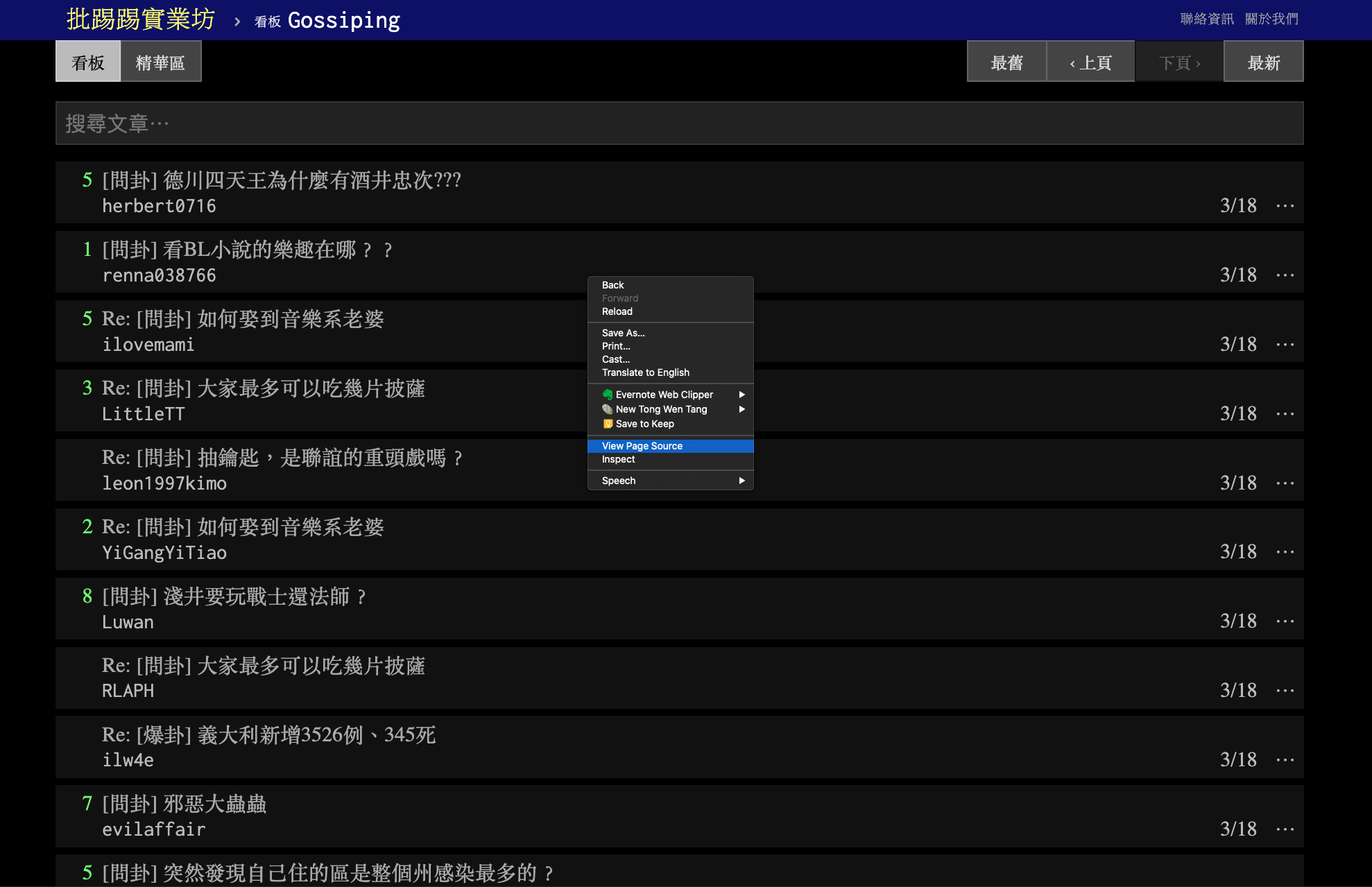

ptt.url <- "https://www.ptt.cc/bbs/Gossiping"

If you use your browser to view the PTT Gossiping page, you will see that you need to go through an age verification before you can enter the content page. So, our first job is to pass through this age verification.

- First, we create a session() (like opening a browser linked to the page)

gossiping.session <- session(ptt.url)

- Second, we extract the age verification form from the current page (form is also a defined HTML element)

gossiping.form <- gossiping.session %>%

html_node("form") %>%

html_form()

- Then we automatically submit a yes to the age verification form in the earlier created session() and create another session.

gossiping <- session_submit(

x = gossiping.session,

form = gossiping.form,

submit = "yes"

)

gossiping

<session> https://www.ptt.cc/bbs/Gossiping/index.html

Status: 200

Type: text/html; charset=utf-8

Size: 12686

Now our html session, i.e., gossiping, should be on the front page of the Gossiping board.

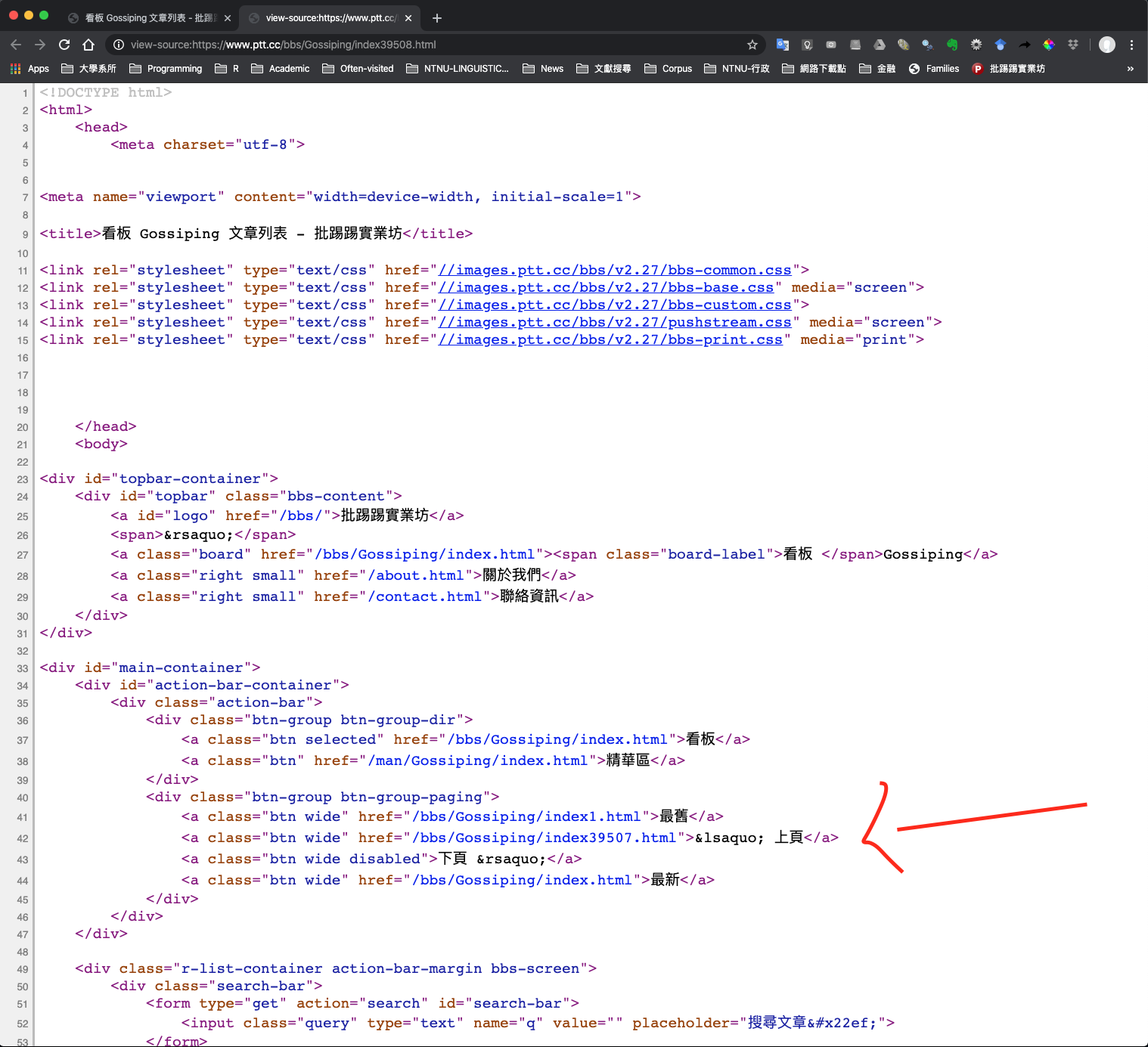

Most browsers come with the functionality to inspect the page source (i.e., HTML). This is very useful for web crawling. Before we scrape data from a webpage, we often need to inspect its structure first. Most importantly, we need to know (a) which HTML elements, or (b) which particular attributes/values of the HTML elements we are interested in.
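As an offline illustration of targeting elements and attribute values, the sketch below works on a mock index page rather than the live site. The markup is a simplified assumption modelled on the link patterns shown in this chapter, not the actual PTT source.

```r
library(rvest)
library(stringr)

# A mock index page; the markup is a simplified, hypothetical imitation
index_html <- read_html('<html><body>
<a href="/bbs/Gossiping/index1.html">oldest</a>
<a href="/bbs/Gossiping/index39261.html">previous</a>
<a href="/bbs/Gossiping/index.html">latest</a>
<div class="title"><a href="/bbs/Gossiping/M.1678991150.A.B6F.html">post 1</a></div>
<div class="title"><a href="/bbs/Gossiping/M.1678991228.A.D7A.html">post 2</a></div>
</body></html>')

# (a) the elements of interest: all <a>; (b) the attribute of interest: href
hrefs <- index_html %>%
  html_nodes("a") %>%
  html_attr("href")

# Keep the index link with a multi-digit page number and extract that number
page_number <- hrefs %>%
  str_subset("index[0-9]{2,}\\.html") %>%
  str_extract("[0-9]+") %>%
  as.numeric()

# Keep only the article links and turn them into absolute URLs
article_links <- hrefs %>%
  str_subset("M\\.[0-9]+\\.A\\.[A-Za-z0-9]+\\.html") %>%
  str_c("https://www.ptt.cc", .)

page_number
article_links
```

Working on a small mock page like this is a convenient way to test regular expressions before sending any request to the real website.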

- Next we need to find the most recent index page of the board

# Decide the number of index pages ----

page.latest <- gossiping %>%

html_nodes("a") %>% # extract all <a> elements

html_attr("href") %>% # extract the attributes `href`

str_subset("index[0-9]{2,}\\.html") %>% # find the `href` with the index number

str_extract("[0-9]+") %>% # extract the number

as.numeric()

page.latest

[1] 39261

- On the most recent index page, we need to extract the hyperlinks to the articles

# Retrieve links -----

link <- str_c(ptt.url, "/index", page.latest, ".html")

links.article <- gossiping %>%

session_jump_to(link) %>% # move session to the most recent page

html_nodes("a") %>% # extract article <a>

html_attr("href") %>% # extract article <a> `href` attributes

str_subset("[A-Za-z]\\.[0-9]+\\.[A-Za-z]\\.[A-Za-z0-9]+\\.html") %>% # keep article links only

str_c("https://www.ptt.cc",.)

links.article

[1] "https://www.ptt.cc/bbs/Gossiping/M.1678991150.A.B6F.html"

[2] "https://www.ptt.cc/bbs/Gossiping/M.1678991228.A.D7A.html"

[3] "https://www.ptt.cc/bbs/Gossiping/M.1678991426.A.A0C.html"

[4] "https://www.ptt.cc/bbs/Gossiping/M.1678991749.A.B6F.html"

[5] "https://www.ptt.cc/bbs/Gossiping/M.1678991784.A.95D.html"

[6] "https://www.ptt.cc/bbs/Gossiping/M.1678991980.A.2E9.html"

[7] "https://www.ptt.cc/bbs/Gossiping/M.1678992200.A.381.html"

[8] "https://www.ptt.cc/bbs/Gossiping/M.1678992524.A.892.html"

[9] "https://www.ptt.cc/bbs/Gossiping/M.1678992816.A.0A5.html"

[10] "https://www.ptt.cc/bbs/Gossiping/M.1678993242.A.889.html"

[11] "https://www.ptt.cc/bbs/Gossiping/M.1678993610.A.345.html"

[12] "https://www.ptt.cc/bbs/Gossiping/M.1678994380.A.D59.html"

[13] "https://www.ptt.cc/bbs/Gossiping/M.1678994649.A.A44.html"

[14] "https://www.ptt.cc/bbs/Gossiping/M.1678995105.A.715.html"

[15] "https://www.ptt.cc/bbs/Gossiping/M.1678995682.A.92E.html"

[16] "https://www.ptt.cc/bbs/Gossiping/M.1678996034.A.529.html"

[17] "https://www.ptt.cc/bbs/Gossiping/M.1678997457.A.94F.html"

[18] "https://www.ptt.cc/bbs/Gossiping/M.1678998290.A.CDC.html"

- The next step is to scrape texts from each article hyperlink. Let’s consider one link first.

article.url <- links.article[1]

temp.html <- gossiping %>%

session_jump_to(article.url) # link to the article- Now the

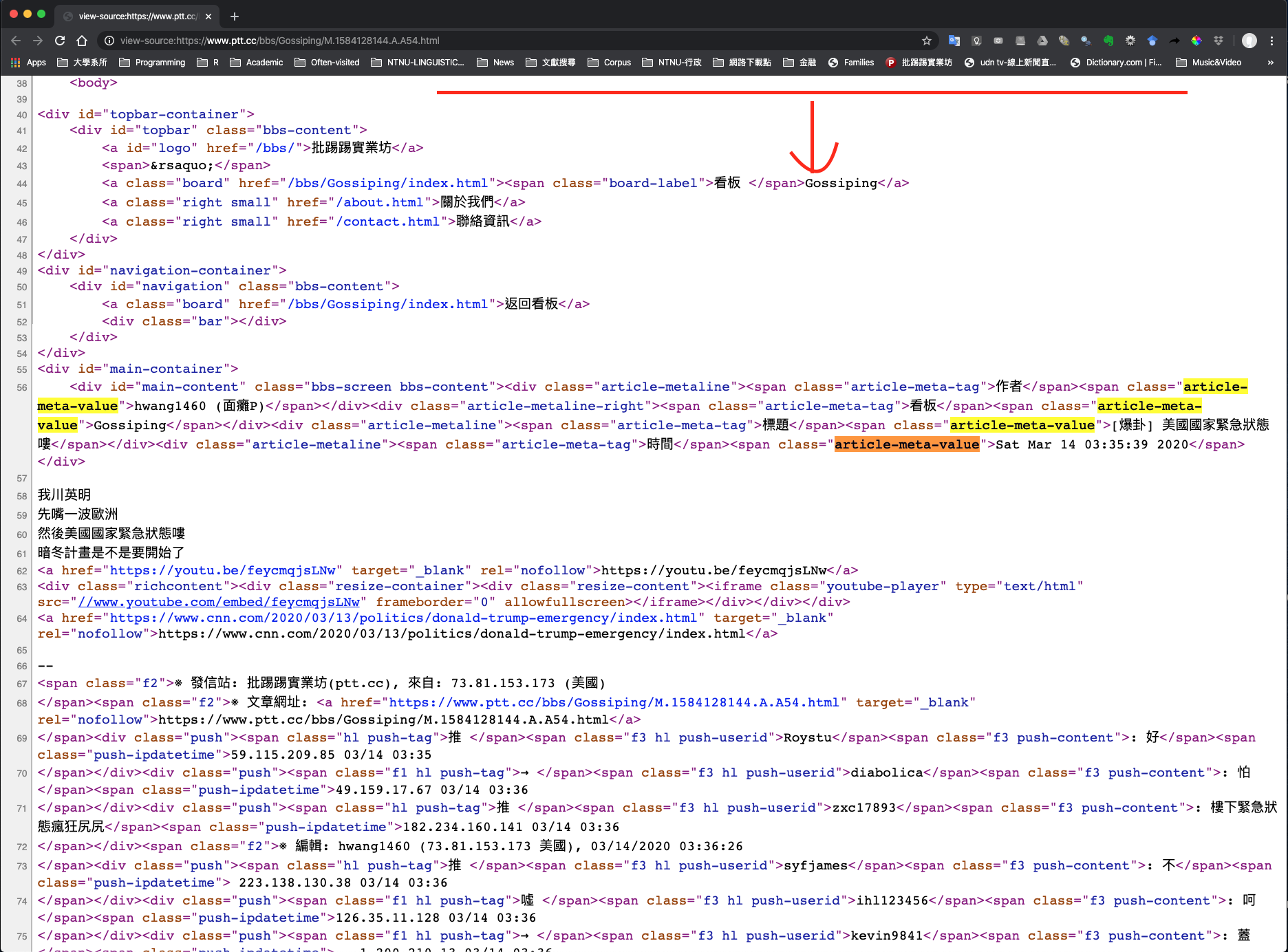

temp.htmlis like a browser on the article page. Because we are interested in the metadata and the contents of each article, now the question is: where are they in the HTML? We need to go back to the source page of the article HTML again:

After a closer inspection of the article HTML, we know that:

- The metadata of the article are included in <span> elements with class="article-meta-value".

- The contents of the article are included in the <div> element with id="main-content".

- Now we are ready to extract the metadata of the article.

# Extract article metadata

article.header <- temp.html %>%

html_nodes("span.article-meta-value") %>% # get <span> of a particular class

html_text()

article.header

[1] "Dinenger (低能兒)" "Gossiping"

[3] "[問卦] 幹嘛一直攻擊黑美人魚的長相?" "Fri Mar 17 02:25:48 2023"

The metadata of each PTT article in fact includes four pieces of information: author, board name, title, and post time. The above code directly retrieves the values of these metadata.

We can retrieve the tags of these metadata values as well:

temp.html %>%

html_nodes("span.article-meta-tag") %>% # get <span> of a particular class

html_text()

[1] "作者" "看板" "標題" "時間"

- From article.header, we are able to extract the author, title, and time stamp of the article.

article.author <- article.header[1] %>% str_extract("^[A-Za-z0-9_]+") # author

article.title <- article.header[3] # title

article.datetime <- article.header[4] # time stamp

article.author

[1] "Dinenger"

article.title

[1] "[問卦] 幹嘛一直攻擊黑美人魚的長相?"

article.datetime

[1] "Fri Mar 17 02:25:48 2023"

- Now we extract the main contents of the article

article.content <- temp.html %>%

html_nodes( # article body

xpath = '//div[@id="main-content"]/node()[not(self::div|self::span[@class="f2"])]'

) %>%

html_text(trim = TRUE) %>% # extract texts

str_c(collapse = "") # combine all lines into one

article.content

[1] "她長這樣也不是她願意的\n她只是想演戲\n然後選角被選上了\n\n她沒有犯錯\n醜不是罪\n\n在此呼籲各位不要這麼卑劣\n\n身為一個演員\n我們應當專注在她的演技上\n而不是長相\n這才是對演員最大的尊重\n\n如果你們喜歡看帥哥美女\n請去看大陸流量明星拍的糞片就好了\n\n不要侮辱電影\n去看你們的卡通吧\n\n懂了嗎?\n\n--"

XPath (or XML Path Language) is a query language which is useful for addressing and extracting particular elements from XML/HTML documents. XPath allows you to exploit more features of the hierarchical tree that an HTML file represents in locating the relevant HTML elements. For more information, please see Munzert et al. (2014), Chapter 4.

In the above example, the XPath identifies the child nodes of <div id="main-content">, but excludes those children that are <div> or <span class="f2">. These <div> and <span class="f2"> children of <div id="main-content"> include the push comments (推文) of the article, which are not the main content of the article.
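To see what this XPath keeps and drops, here is a minimal sketch on a made-up article fragment. The markup only imitates the structure described above; the texts and the extra class names are hypothetical.

```r
library(rvest)

# A made-up article page that imitates the structure described above
doc <- read_html('<html><body>
<div id="main-content">
<div class="article-metaline"><span class="article-meta-value">someone</span></div>
First line of the post.
<span class="hl">Second line.</span>
<span class="f2">Signature line that is not part of the post</span>
<div class="push"><span class="push-content">: a comment</span></div>
</div>
</body></html>')

# Select every child node of div#main-content, including bare text nodes,
# except children that are <div> or <span class="f2">
kept <- doc %>%
  html_nodes(
    xpath = '//div[@id="main-content"]/node()[not(self::div|self::span[@class="f2"])]'
  ) %>%
  html_text(trim = TRUE)

# Drop nodes that contain only whitespace
kept <- kept[kept != ""]

body_text <- paste(kept, collapse = "\n")
body_text
```

The node() test is what allows the bare text of the post to be selected; a CSS selector can only address elements, not text nodes, which is presumably why XPath is used for this step.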

- Now we can combine all information related to the article into a data frame

article.table <- tibble(

datetime = article.datetime,

title = article.title,

author = article.author,

content = article.content,

url = article.url

)

article.table

- Next we extract the push comments at the end of the article

article.push <- temp.html %>%

html_nodes(xpath = "//div[@class = 'push']")

article.push

{xml_nodeset (35)}

[1] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[2] <div class="push">\n<span class="hl push-tag">推 </span><span class="f3 h ...

[3] <div class="push">\n<span class="hl push-tag">推 </span><span class="f3 h ...

[4] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[5] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[6] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[7] <div class="push">\n<span class="f1 hl push-tag">噓 </span><span class="f ...

[8] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[9] <div class="push">\n<span class="hl push-tag">推 </span><span class="f3 h ...

[10] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[11] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[12] <div class="push">\n<span class="hl push-tag">推 </span><span class="f3 h ...

[13] <div class="push">\n<span class="f1 hl push-tag">噓 </span><span class="f ...

[14] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[15] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[16] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[17] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[18] <div class="push">\n<span class="f1 hl push-tag">→ </span><span class="f ...

[19] <div class="push">\n<span class="f1 hl push-tag">噓 </span><span class="f ...

[20] <div class="push">\n<span class="hl push-tag">推 </span><span class="f3 h ...

...

We then extract the relevant information from each push node in article.push:

- push types

- push authors

- push contents

- push time stamps

# push tags

push.table.tag <- article.push %>%

html_nodes("span.push-tag") %>%

html_text(trim = TRUE) # push types (like or dislike)

push.table.tag

[1] "→" "推" "推" "→" "→" "→" "噓" "→" "推" "→" "→" "推" "噓" "→" "→"

[16] "→" "→" "→" "噓" "推" "→" "推" "→" "推" "→" "→" "→" "→" "噓" "→"

[31] "推" "→" "→" "→" "→"

# push authors

push.table.author <- article.push %>%

html_nodes("span.push-userid") %>%

html_text(trim = TRUE) # author

push.table.author

[1] "twdvdr" "heavensun" "whitenoise" "whitenoise" "heavensun"

[6] "key555102" "Zyphyr" "key555102" "zxciop741" "heavensun"

[11] "zxciop741" "Takhisis" "ju851996" "VL1003" "VL1003"

[16] "VL1003" "VL1003" "VL1003" "dreamfree" "GOD5566"

[21] "GOD5566" "saphx3321" "Dinenger" "saphx3321" "saphx3321"

[26] "saphx3321" "saphx3321" "GEoilo" "Carters1109" "Carters1109"

[31] "sellgd" "bookseasy" "bookseasy" "bookseasy" "soilndger"

# push contents

push.table.content <- article.push %>%

html_nodes("span.push-content") %>%

html_text(trim = TRUE)

push.table.content

[1] ": 沒人說他丑只有你吧 大家只是覺得政治過頭"

[2] ": 9一群搞不清楚狀況的 要罵是罵高層"

[3] ": 不見得是在嫌她外貌,有的人只是希望"

[4] ": 能終於原著"

[5] ": 迪士尼高層的作法 是傷害 創作自由"

[6] ": 真的只是政治過度 黑人白人不是問題"

[7] ": https://i.imgur.com/GNJ6R90.jpg"

[8] ": 換作泰山真人版 你看他們敢找黑人?"

[9] ": 她是有背景靠關係才能拿到這角色的"

[10] ": 政治道德嘞索 導演 編劇不敢自由創作"

[11] ": 如果是透過自身努力真的沒人會嘴"

[12] ": 有選角就好惹對吧碧昂絲?"

[13] ": 醜要有自知 出來嚇人就是她的問題"

[14] ": 理論上腳本在接角色前就知道,這種跟原作"

[15] ": 差異大到誇張的,要接就是會有被酸爛的風"

[16] ": 險,就像如果拍孔子傳記,去找外國人演孔"

[17] ": 子,你覺得這能不被酸爛嗎? 根本不該接"

[18] ": 攻擊長相倒還好,主要是政治正確過頭太噁"

[19] ": 怎麼會覺得會有人去看這部.."

[20] ": 蠻中肯的 還有人花式合理化自己霸凌別人"

[21] ": 長相的行為=="

[22] ": https://i.imgur.com/H7C0bAk.jpg"

[23] ": 我抗議 AI抄襲我的言論"

[24] ": 它一開始是其實是嗆你的 什麼 唉唉唉"

[25] ": 看來這邊有個親小黑美人魚的三小的 太"

[26] ": 好笑 正要截圖 按到刷新 我重新問就變"

[27] ": 正常回答了 可惜"

[28] ": 有本事就翻拍索多瑪一百二十天通通找黑人"

[29] ": 當演員外表本來就會被檢視啊 何來霸"

[30] ": 凌之說?"

[31] ": 本來以為你要反串 結果一點梗都沒有"

[32] ": 人設超越肥宅小時候對迪士尼卡通認知"

[33] ": ,完全跟原作不一樣。真的會幻想破滅"

[34] ": ,無法理解。迪士尼別再惡搞原作了。"

[35] ": 酸民無所不酸 不分國際"

# push time

push.table.datetime <- article.push %>%

html_nodes("span.push-ipdatetime") %>%

html_text(trim = TRUE) # push time stamp

push.table.datetime

[1] "36.224.240.145 03/17 02:27" "125.228.79.97 03/17 02:27"

[3] "223.139.169.158 03/17 02:27" "223.139.169.158 03/17 02:27"

[5] "125.228.79.97 03/17 02:27" "27.51.72.73 03/17 02:28"

[7] "205.175.106.158 03/17 02:28" "27.51.72.73 03/17 02:28"

[9] "106.1.229.100 03/17 02:28" "125.228.79.97 03/17 02:28"

[11] "106.1.229.100 03/17 02:28" "114.136.176.198 03/17 02:30"

[13] "49.216.53.175 03/17 02:30" "1.162.17.123 03/17 02:36"

[15] "1.162.17.123 03/17 02:37" "1.162.17.123 03/17 02:37"

[17] "1.162.17.123 03/17 02:37" "1.162.17.123 03/17 02:38"

[19] "61.230.89.154 03/17 02:39" "180.217.247.101 03/17 02:40"

[21] "180.217.247.101 03/17 02:40" "36.229.110.38 03/17 02:50"

[23] "110.30.40.182 03/17 02:51" "36.229.110.38 03/17 02:56"

[25] "36.229.110.38 03/17 02:56" "36.229.110.38 03/17 02:56"

[27] "36.229.110.38 03/17 02:56" "111.251.102.90 03/17 03:19"

[29] "220.138.220.167 03/17 03:21" "220.138.220.167 03/17 03:21"

[31] "203.222.13.126 03/17 03:42" "123.193.229.85 03/17 03:44"

[33] "123.193.229.85 03/17 03:44" "123.193.229.85 03/17 03:44"

[35] "111.255.197.161 03/17 04:05"

- Finally, we combine all into one Push data frame.

push.table <- tibble(

tag = push.table.tag,

author = push.table.author,

content = push.table.content,

datetime = push.table.datetime,

url = article.url)

push.table

3.3 Functional Programming

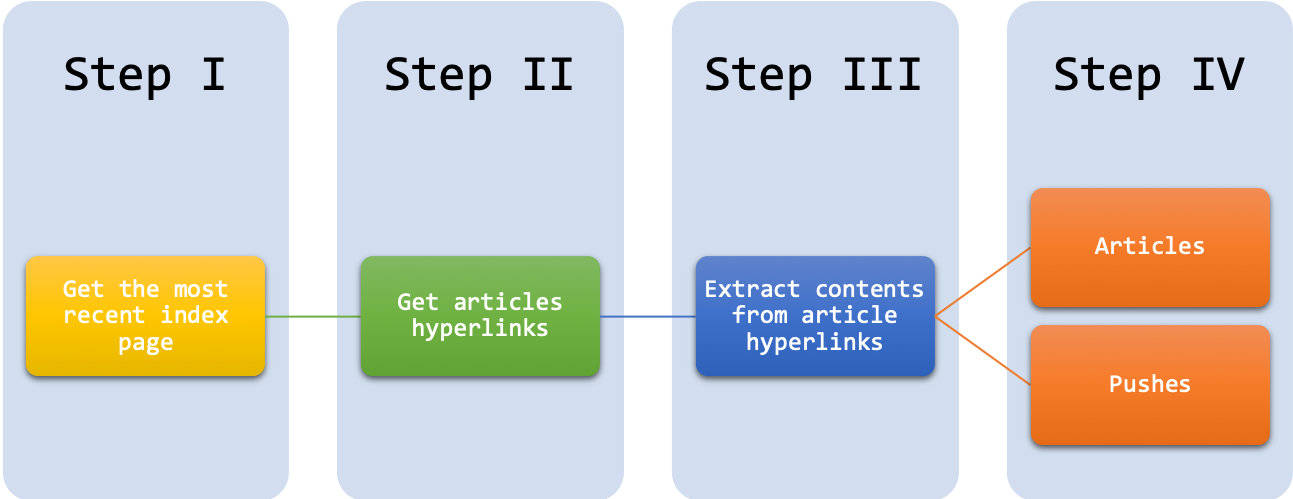

It should now be clear that there are several routines that we need to do again and again if we want to collect text data in large amounts:

- For each index page, we need to extract all the article hyperlinks of the page.

- For each article hyperlink, we need to extract the article content, metadata, and the push comments.

So, it would be great if we can wrap these two routines into two functions.

3.3.1 extract_art_links()

extract_art_links(): This function takes an index page of PTT Gossiping (index_page) and an HTML session (session) as its arguments and extracts all article links from the index page. It returns a vector of article links.

extract_art_links <- function(index_page, session){

links.article <- session %>%

session_jump_to(index_page) %>%

html_nodes("a") %>%

html_attr("href") %>%

str_subset("[A-Za-z]\\.[0-9]+\\.[A-Za-z]\\.[A-Za-z0-9]+\\.html") %>%

str_c("https://www.ptt.cc",.)

return(links.article)

}

For example, we can extract all the article links from the most recent index page:

# Get index page

cur_index_page <- str_c(ptt.url, "/index", page.latest, ".html")

# Get all article links from the most recent index page

cur_art_links <- extract_art_links(cur_index_page, gossiping)

cur_art_links

[1] "https://www.ptt.cc/bbs/Gossiping/M.1678991150.A.B6F.html"

[2] "https://www.ptt.cc/bbs/Gossiping/M.1678991228.A.D7A.html"

[3] "https://www.ptt.cc/bbs/Gossiping/M.1678991426.A.A0C.html"

[4] "https://www.ptt.cc/bbs/Gossiping/M.1678991749.A.B6F.html"

[5] "https://www.ptt.cc/bbs/Gossiping/M.1678991784.A.95D.html"

[6] "https://www.ptt.cc/bbs/Gossiping/M.1678991980.A.2E9.html"

[7] "https://www.ptt.cc/bbs/Gossiping/M.1678992200.A.381.html"

[8] "https://www.ptt.cc/bbs/Gossiping/M.1678992524.A.892.html"

[9] "https://www.ptt.cc/bbs/Gossiping/M.1678992816.A.0A5.html"

[10] "https://www.ptt.cc/bbs/Gossiping/M.1678993242.A.889.html"

[11] "https://www.ptt.cc/bbs/Gossiping/M.1678993610.A.345.html"

[12] "https://www.ptt.cc/bbs/Gossiping/M.1678994380.A.D59.html"

[13] "https://www.ptt.cc/bbs/Gossiping/M.1678994649.A.A44.html"

[14] "https://www.ptt.cc/bbs/Gossiping/M.1678995105.A.715.html"

[15] "https://www.ptt.cc/bbs/Gossiping/M.1678995682.A.92E.html"

[16] "https://www.ptt.cc/bbs/Gossiping/M.1678996034.A.529.html"

[17] "https://www.ptt.cc/bbs/Gossiping/M.1678997457.A.94F.html"

[18] "https://www.ptt.cc/bbs/Gossiping/M.1678998290.A.CDC.html"

3.3.2 extract_article_push_tables()

extract_article_push_tables(): This function takes an article link (link) as its argument and extracts the metadata, textual contents, and pushes of the article. It returns a list of two elements: the article data frame and the push data frame.

extract_article_push_tables <- function(link){

article.url <- link

temp.html <- gossiping %>% session_jump_to(article.url) # jump to the article page (relies on the global session `gossiping`)

# article header

article.header <- temp.html %>%

html_nodes("span.article-meta-value") %>% # meta info regarding the article

html_text()

# article meta

article.author <- article.header[1] %>% str_extract("^[A-Za-z0-9_]+") # author

article.title <- article.header[3] # title

article.datetime <- article.header[4] # time stamp

# article content

article.content <- temp.html %>%

html_nodes( # article body

xpath = '//div[@id="main-content"]/node()[not(self::div|self::span[@class="f2"])]'

) %>%

html_text(trim = TRUE) %>%

str_c(collapse = "")

# Merge article table

article.table <- tibble(

datetime = article.datetime,

title = article.title,

author = article.author,

content = article.content,

url = article.url

)

# push nodes

article.push <- temp.html %>%

html_nodes(xpath = "//div[@class = 'push']") # extracting pushes

# NOTE: If CSS is used, div.push does a lazy match (extracting div.push.... also)

# push tags

push.table.tag <- article.push %>%

html_nodes("span.push-tag") %>%

html_text(trim = TRUE) # push types (like or dislike)

# push author id

push.table.author <- article.push %>%

html_nodes("span.push-userid") %>%

html_text(trim = TRUE) # author

# push content

push.table.content <- article.push %>%

html_nodes("span.push-content") %>%

html_text(trim = TRUE)

# push datetime

push.table.datetime <- article.push %>%

html_nodes("span.push-ipdatetime") %>%

html_text(trim = TRUE) # push time stamp

# merge push table

push.table <- tibble(

tag = push.table.tag,

author = push.table.author,

content = push.table.content,

datetime = push.table.datetime,

url = article.url

)

# return

return(list(article.table = article.table,

push.table = push.table))

} # endfunc

For example, we can get the article and push tables from the first article link:

extract_article_push_tables(cur_art_links[1])

$article.table

# A tibble: 1 × 5

datetime title author content url

<chr> <chr> <chr> <chr> <chr>

1 Fri Mar 17 02:25:48 2023 [問卦] 幹嘛一直攻擊黑美人魚的長… Dinen… "她長… http…

$push.table

# A tibble: 35 × 5

tag author content datetime url

<chr> <chr> <chr> <chr> <chr>

1 → twdvdr : 沒人說他丑只有你吧 大家只是覺得政治過頭 36.224.240.… http…

2 推 heavensun : 9一群搞不清楚狀況的 要罵是罵高層 125.228.79.… http…

3 推 whitenoise : 不見得是在嫌她外貌,有的人只是希望 223.139.169… http…

4 → whitenoise : 能終於原著 223.139.169… http…

5 → heavensun : 迪士尼高層的作法 是傷害 創作自由 125.228.79.… http…

6 → key555102 : 真的只是政治過度 黑人白人不是問題 27.51.72.73… http…

7 噓 Zyphyr : https://i.imgur.com/GNJ6R90.jpg 205.175.106… http…

8 → key555102 : 換作泰山真人版 你看他們敢找黑人? 27.51.72.73… http…

9 推 zxciop741 : 她是有背景靠關係才能拿到這角色的 106.1.229.1… http…

10 → heavensun : 政治道德嘞索 導演 編劇不敢自由創作 125.228.79.… http…

# … with 25 more rows

3.3.3 Streamline the Code

Now we can simplify our code quite a bit:

# Get index page

cur_index_page <- str_c(ptt.url, "/index", page.latest, ".html")

# Scrape all article.tables and push.tables from each article hyperlink

cur_index_page %>%

extract_art_links(session = gossiping) %>%

map(extract_article_push_tables) -> ptt_data

# number of articles on this index page

length(ptt_data)

[1] 18

# Check the contents of the first hyperlink

ptt_data[[1]]$article.table

ptt_data[[1]]$push.table

- Finally, the last thing we can do is to combine all article tables from each index page into one, and all push tables into one, for later analysis.

# Merge all article.tables into one

article.table.all <- ptt_data %>%

map(function(x) x$article.table) %>%

bind_rows()

# Merge all push.tables into one

push.table.all <- ptt_data %>%

map(function(x) x$push.table) %>%

bind_rows()

article.table.all

push.table.all

There is still one problem with the Push data frame. Right now it is still not very clear how we can match the pushes to the articles from which they were extracted. The only shared index is the url. It would be better if all the articles in the data frame had their own unique indices and, in the Push data frame, each push comment corresponded to a particular article index.
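One possible way to add such indices is sketched below on two toy data frames. The column names article_id and push_id, as well as the example URLs, are assumptions introduced for illustration; this is one option among several rather than a prescribed solution.

```r
library(dplyr)
library(tibble)

# Toy stand-ins for the combined article and push tables (hypothetical data)
articles <- tibble(
  url = c("https://example.org/a.html", "https://example.org/b.html"),
  title = c("First post", "Second post")
)

pushes <- tibble(
  url = c(
    "https://example.org/a.html",
    "https://example.org/a.html",
    "https://example.org/b.html"
  ),
  content = c("comment 1", "comment 2", "comment 3")
)

# Give every article a unique index
articles_indexed <- articles %>%
  mutate(article_id = row_number())

# Carry the article index over to the pushes via the shared url,
# then number the pushes within each article
pushes_indexed <- pushes %>%
  left_join(select(articles_indexed, url, article_id), by = "url") %>%
  group_by(article_id) %>%
  mutate(push_id = row_number()) %>%
  ungroup()

pushes_indexed
```

With article_id in both tables, each push can be traced back to its article without comparing long url strings.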

The following graph summarizes our workflow for scraping PTT Gossiping:

3.4 Save Corpus

You can easily save your scraped texts in a CSV format.

# Save ------

write_csv(article.table.all, file = "PTT_GOSSIPPING_ARTICLE.csv")

write_csv(push.table.all, file = "PTT_GOSSIPPING_PUSH.csv")

3.5 Additional Resources

Collecting texts and digitizing them into machine-readable files is only the initial step for corpus construction. There are many other things that need to be considered to ensure the effectiveness and the sustainability of the corpus data. In particular, I would like to point you to a very useful resource, Developing Linguistic Corpora: A Guide to Good Practice, compiled by Martin Wynne. Other important issues in corpus creation include:

- Adding linguistic annotations to the corpus data (cf. Leech’s Chapter 2)

- Metadata representation of the documents (cf. Burnard’s Chapter 4)

- Spoken corpora (cf. Thompson’s Chapter 5)

- Technical parts for corpus creation (cf. Sinclair’s Appendix)

3.6 Final Remarks

- Please pay attention to the ethical aspects involved in the process of web crawling (esp. with personal private matters).

- If the website has its own API built specifically for gathering data, use it instead of scraping.

- Always read the terms and conditions provided by the website regarding data gathering.

- Always be gentle with the data scraping (e.g., off-peak hours, spacing out the requests)

- Value the data you gather and treat the data with respect.
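As an illustration of spacing out requests, the sketch below wraps a scraping function so that it pauses between consecutive calls. The helper map_politely() and the stand-in fake_scraper() are hypothetical names introduced here, and the delay value is arbitrary.

```r
library(purrr)

# Hypothetical helper: apply `f` to each link, pausing `delay` seconds
# between consecutive calls so that requests are spaced out
map_politely <- function(links, f, delay = 1) {
  map(seq_along(links), function(i) {
    if (i > 1) Sys.sleep(delay) # no need to wait before the first request
    f(links[[i]])
  })
}

# Stand-in for a real scraping function, so that the sketch runs offline
fake_scraper <- function(link) nchar(link)

demo_links <- c("a.html", "bb.html", "ccc.html")

started <- Sys.time()
results <- map_politely(demo_links, fake_scraper, delay = 0.2)
elapsed <- as.numeric(difftime(Sys.time(), started, units = "secs"))

length(results)
```

In this chapter's workflow, a call such as map_politely(cur_art_links, extract_article_push_tables, delay = 1) could stand in for the plain map() call, at the cost of a longer running time.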

Exercise 3.1 Can you modify the R codes so that the script can automatically scrape more than one index page?

Exercise 3.2 Please utilize the code from Exercise 3.1 and collect all texts on PTT Gossiping from 3 index pages. Please have the articles saved in PTT_GOSSIPING_ARTICLE.csv and the pushes saved in PTT_GOSSIPING_PUSH.csv under your working directory.

Also, at the end of your code, please also output in the Console the corpus size, including both the articles and the pushes. Please provide the total number of characters of all your PTT text data collected (Note: You DO NOT have to do the word segmentation yet. Please use the characters as the base unit for corpus size.)

Hint: nchar()

Your script may look something like:

# I define my own `scrapePTT()` function:

# ptt_url: specify the board to scrape texts from

# num_index_page: specify the number of index pages to be scraped

# return: list(article, push)

PTT_data <- scrapePTT(ptt_url = "https://www.ptt.cc/bbs/Gossiping", num_index_page = 3)

PTT_data$article %>% head

PTT_data$push %>% head

# corpus size

PTT_data$article$content %>% nchar %>% sum

[1] 29207

Exercise 3.3 Please choose a website (other than PTT) you are interested in and demonstrate how you can use R to retrieve textual data from the site. The final scraped text collection could be from only one static web page. The purpose of this exercise is to show that you know how to parse the HTML structure of the web page and retrieve the data you need from the website.